RockAI’s Yan 2.0: Rethinking Memory and Architecture in AI

Vivi Carter · 31 July 2025

The Unveiling: WAIC Witnesses a Disruptive Leap in Homegrown AI

At this year's World Artificial Intelligence Conference (WAIC) in Shanghai, the Chinese startup RockAI captured the spotlight. The buzz was not about another incremental upgrade but about the debut of Yan 2.0 Preview, a next-generation large model with native memory, built on an architecture that steps away from the reigning Transformer design.

Picture this: a robotic dog learns a new trick simply by watching a video demonstration, then immediately picks out its owner’s favorite drink after a single visual cue. Smart robotic arms not only interpret and act on human commands in real time but also strategize and succeed at complex games, showing nuanced, near-human reasoning. These demos, widely shared at WAIC and reported by local tech media, left industry veterans and the public equally stunned.

Rethinking the Foundations: Beyond Transformers

Why Move Away from Transformers?

For years, the Transformer architecture has dominated the AI landscape, from language modeling to multimodality. Yet concerns have been growing about its scalability and efficiency (the cost of standard self-attention grows quadratically with sequence length) and about the limits of its context memory. AI luminaries from CMU’s Albert Gu (co-creator of the Mamba architecture; see “Palpable Progress in Sequence Modeling”) to Google’s Logan Kilpatrick and even Andrej Karpathy have openly discussed these issues.

Transformers, it turns out, aren’t universally optimal. Excessive computational demands and fundamental constraints on context length invite alternatives, and the tech world is ready to listen.

RockAI’s Distinct Path

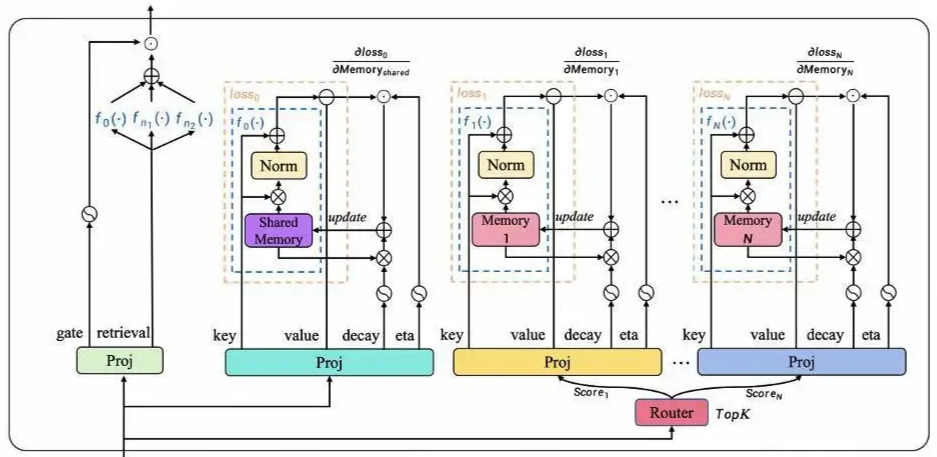

Enter RockAI’s Yan 2.0, distinct not just for being homegrown but for abandoning the Transformer entirely. It instead uses a proprietary architecture with a native memory module that handles storage, retrieval, and forgetting within the model’s own parameters.
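
RockAI has not published the internals of its memory module, so the sketch below does not describe Yan 2.0. It illustrates a generic, well-known technique, a fast-weight associative memory, to show what "storage, retrieval, and forgetting within parameters" can mean in principle: a write adds an outer product to a weight matrix, a read is a matrix-vector product, and forgetting is a decay factor applied before each write. The class name `DecayingAssociativeMemory` and its `decay` parameter are hypothetical.

```python
import numpy as np


class DecayingAssociativeMemory:
    """Hypothetical illustration of a generic fast-weight memory (not RockAI's design).

    Storage:    W <- decay * W + outer(value, key)
    Retrieval:  value_estimate = W @ query
    Forgetting: the decay factor shrinks older associations on every write.
    """

    def __init__(self, dim: int, decay: float = 0.9):
        self.W = np.zeros((dim, dim))  # the "memory" lives entirely in this weight matrix
        self.decay = decay

    def write(self, key: np.ndarray, value: np.ndarray) -> None:
        k = key / np.linalg.norm(key)  # unit-length key so reads are scale-independent
        self.W = self.decay * self.W + np.outer(value, k)

    def read(self, query: np.ndarray) -> np.ndarray:
        q = query / np.linalg.norm(query)
        return self.W @ q


# Usage: two orthogonal keys, e.g. "owner's favorite drink" and "new gesture".
mem = DecayingAssociativeMemory(dim=4, decay=0.9)
k1, k2 = np.eye(4)[0], np.eye(4)[1]
v1 = np.array([1.0, 0.0, 2.0, 0.0])
v2 = np.array([0.0, 3.0, 0.0, 1.0])

mem.write(k1, v1)
first_read = mem.read(k1)   # equals v1: with orthonormal keys, recall is exact
mem.write(k2, v2)
faded_read = mem.read(k1)   # equals 0.9 * v1: the older memory has partly faded
```

With orthonormal keys each association is recalled exactly apart from decay; with overlapping keys the stored items interfere, which is the main capacity limit of this simple scheme.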

With just 3 billion parameters, Yan 2.0 rivals, and on some benchmarks surpasses, larger models such as Llama 3 8B. Its true promise, though, is practical: a model small enough to run on the devices people already own.

Real-time Learning & Native Memory: What Changed?

On-the-Fly, Multimodal Learning

Yan 2.0 Preview’s magic lies in its responsiveness. Demonstrate a new gesture to a robot dog or show it a specific beverage, and it remembers and acts on it immediately. There is no cloud sync, database call, or API round trip; the learning happens entirely on the device.

This “native memory” is not an add-on but a core feature. It echoes research on memory-augmented neural networks, but is implemented efficiently and runs entirely on the device.

Implications Beyond the Demo Floor

Unlike most current models, which rely on cloud access or external retrieval techniques such as RAG (Retrieval-Augmented Generation), Yan 2.0 keeps its memory inside the neural network. Rather than looking facts up in an external database, it actually remembers them. This enables more intuitive, personalized interactions: the model isn’t just answering your next question, it’s starting to understand you.

Offline, On-Device: Real AI at the Edge

Why Edge AI Is the Next Battleground

The industry has long promised AI “everywhere,” but cloud dependencies and efficiency bottlenecks have kept that promise out of reach. Yan 2.0 sidesteps this: its architecture is slim enough that full-fidelity models can be deployed on consumer hardware such as robots, smartphones, and even devices with only 8 GB of RAM.
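
The 8 GB figure is easier to judge with some back-of-the-envelope arithmetic. The article does not say at what numeric precision Yan 2.0’s weights are stored, so the sketch below assumes three common options (16-bit floats, 8-bit and 4-bit integers) and counts raw weight storage only, ignoring activations, caches, the runtime, and the operating system. Llama 3 8B is included at its nominal parameter count for comparison.

```python
# Assumed bytes per parameter for common storage precisions (not confirmed for Yan 2.0).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}
# Nominal parameter counts taken from the article.
MODELS = {"Yan 2.0 (3B)": 3e9, "Llama 3 8B": 8e9}


def weight_footprint_gib(params: float, bytes_per_param: float) -> float:
    """Raw weight storage in GiB (2**30 bytes); excludes everything else in memory."""
    return params * bytes_per_param / 2**30


table = {
    (model, precision): weight_footprint_gib(params, b)
    for model, params in MODELS.items()
    for precision, b in BYTES_PER_PARAM.items()
}

for (model, precision), gib in table.items():
    print(f"{model:<14} {precision}: {gib:5.2f} GiB")
```

Under these assumptions, a 3B model stored at 16 bits already needs about 5.6 GiB for its weights, which is tight on an 8 GB phone, while 8-bit or 4-bit storage leaves real headroom. An 8B model at 16 bits (about 14.9 GiB) would not fit in 8 GB of RAM at all.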

Performance figures so far (based on RockAI’s demo statistics and early hands-on tests by Chinese tech media):

- Xiaomi 13: 18 tokens/sec

- Redmi K50: 12 tokens/sec

- T-phone: 7-8 tokens/sec
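
To translate these rates into waiting time, the sketch below estimates how long each phone would take to generate a reply. The 200-token reply length is a hypothetical choice, the 7-8 tokens/sec range quoted for the T-phone is taken at its 7.5 midpoint, and the estimate covers steady-state generation only, not the time spent processing the prompt.

```python
# Generation rates quoted in the article (tokens per second).
# The T-phone figure is the midpoint of the quoted 7-8 tokens/sec range.
RATES = {"Xiaomi 13": 18.0, "Redmi K50": 12.0, "T-phone": 7.5}
REPLY_TOKENS = 200  # hypothetical reply length, chosen only for illustration


def generation_seconds(tokens: int, tokens_per_sec: float) -> float:
    """Time to emit `tokens` at a constant rate; ignores prompt processing."""
    return tokens / tokens_per_sec


estimates = {device: generation_seconds(REPLY_TOKENS, r) for device, r in RATES.items()}
for device, seconds in estimates.items():
    print(f"{device}: {seconds:.1f} s for {REPLY_TOKENS} tokens")
```

Because time scales linearly with length, the same arithmetic applies to any reply size: a 200-token answer arrives in roughly 11 seconds on the fastest phone listed and roughly 27 seconds on the slowest.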

On robotics hardware such as Intel Core i7 processors or NVIDIA Jetson boards, multimodal perception and control run locally and smoothly. This means faster responses, lower latency, and, crucially for privacy-conscious users, data that stays on the device instead of passing through the cloud.

Accessibility and Inclusivity

RockAI’s core mission is “A brain for every device.” In practical terms, that means on-device intelligence for users beyond big cities and privileged demographics: children, seniors, everyone, everywhere.

The Broader Shift: AI with True Memory

From Patchwork AI to Genuine Learning Partners

Traditional models keep new or user-specific knowledge outside the model, in plug-ins, cloud APIs, or on-the-fly retrieval, which yields impressive but fragmented intelligence. Native memory changes this: the AI can develop real preferences and adapt over time, recalling your prior choices and style.

This moves AI from a tool to something closer to a companion. The user no longer needs to start from scratch in every interaction; the AI can anticipate needs and respond in a genuinely personalized way, a qualitative leap in human-machine relationships.

Privacy and Speed: The Twin Advantage of On-Device Memory

With everything processed locally, privacy is protected and the user experience improves: responses are snappier, and the system keeps working when connectivity drops. This is in line with evolving global expectations for privacy-centered AI (see Mozilla’s privacy AI guidelines).

Looking Forward: Will the Age of Edge AI Be Made in China?

RockAI’s commitment to non-Transformer architectures is more than a technical choice—it’s a philosophical stance. As big tech players experiment with smart glasses, brain-machine interfaces, and other bold forms of AI hardware, RockAI is betting on operating systems that integrate directly with next-generation local AI models.

Their argument is compelling: if AI is to become a “brain” for the billions of smart devices scattered across the globe, it must be frugal, nimble, and natively smart. Even today, Yan’s 3B model can handle translation, meeting summaries, and more right out of the box, with no cloud required.

Relevant Resources