No-Code Meets LLMs: 6 Tools for Smart AI Workflows


Eric Walker · 14 July 2025

The rapid progress of large language models (LLMs) has created a boom in tools that let developers and non-developers alike build intelligent systems. Building applications around LLMs used to require a blend of engineering skill and AI intuition, but no-code and low-code platforms are now lowering the barrier to entry dramatically.

In this post, we’ll explore 6 open-source no-code tools that let you build with LLMs, agents, and Retrieval-Augmented Generation (RAG) systems—without touching Python. These tools support drag-and-drop interfaces, multimodal inputs, plugin architectures, and smart memory management, letting you focus on what the AI does, not how it’s wired under the hood.

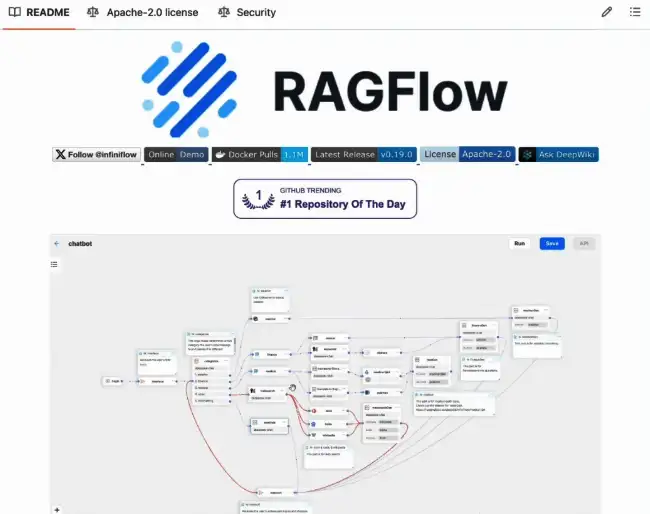

RAGFlow – Build Enterprise-Grade RAG Workflows

RAGFlow is a no-code framework tailored for deep document analysis using Retrieval-Augmented Generation. Designed for enterprise contexts, it handles complex document structures, integrates web search, and provides reliable source citations. It also supports multimodal understanding, so you can mix text, images, and structured data in your workflows.

Whether you’re building a legal document summarizer, financial report analyzer, or cross-domain knowledge agent, RAGFlow lets you do it all without writing custom code.
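For readers curious what "retrieval with source citations" means mechanically, here is a minimal sketch in plain Python. It is not RAGFlow's implementation: the bag-of-words similarity stands in for a real embedding model, and the chunk texts, source labels, and function names are all made up for illustration.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, chunks, top_k=2):
    """Return up to top_k chunks with non-zero similarity, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(c["text"]))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for score, c in scored[:top_k] if score > 0]

def build_prompt(query, hits):
    """Assemble a prompt whose context lines carry [n] citation markers."""
    context = "\n".join(
        f"[{i}] ({h['source']}) {h['text']}" for i, h in enumerate(hits, start=1)
    )
    return (
        "Answer using only the context and cite sources as [n].\n\n"
        f"{context}\n\nQuestion: {query}"
    )

# Toy document chunks (hypothetical), each tagged with where it came from.
CHUNKS = [
    {"source": "contract.pdf, p.3", "text": "The lease term is five years from the start date."},
    {"source": "contract.pdf, p.7", "text": "Either party may terminate with ninety days notice."},
    {"source": "report.pdf, p.1", "text": "Revenue grew in the third quarter."},
]

question = "How long is the lease term?"
hits = retrieve(question, CHUNKS)
prompt = build_prompt(question, hits)
print(prompt)
```

Because every context line keeps its source label, the model's answer can point back to a specific page, which is the property that makes citations checkable.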

xpander – Backend for Building Smart, Stateful Agents

xpander is a backend platform that helps you build, manage, and deploy LLM-based agents. What makes it stand out is its framework-agnostic design: it works with LlamaIndex, CrewAI, and other agent ecosystems, managing everything from tool integration and event flow to user state and memory.

Its intuitive interface lets users create multi-agent systems with complex reasoning abilities, complete with guardrails for safer deployments. It is ideal for those who want to explore agentic AI with minimal engineering overhead.

TransformerLab – A Playground for Training and Experimenting With LLMs

TransformerLab is both an app and a toolkit for experimenting with LLMs. With a sleek drag-and-drop UI, users can set up Retrieval-Augmented Generation (RAG) workflows, fine-tune open-source models like DeepSeek or Gemma, and even log experiments—all from a single interface.

This tool is designed for AI researchers and tinkerers who want fast iteration cycles without being bogged down by scripts and CLI tools. TransformerLab blends flexibility with accessibility, making it a solid choice for prototyping LLM-powered applications.

LLaMA-Factory – Fine-Tune 100+ Open-Source Models With Zero Code

LLaMA-Factory supports one-click training and fine-tuning of more than 100 open-source LLMs and vision-language models (VLMs). It’s compatible with advanced tuning methods like PPO (Proximal Policy Optimization) and DPO (Direct Preference Optimization) and provides built-in experiment tracking.

Best of all, you don’t need to write a single line of Python to get started. The platform is perfect for anyone who wants to adapt open models to specific domains, from legal and healthcare to customer service and education.
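You never have to write this yourself, but it can help to see what a method like DPO is optimizing under the hood. The sketch below computes the standard DPO loss for a single preference pair from four log-probabilities. It is an illustration of the published objective, not LLaMA-Factory's code; the function name, the default `beta`, and the numbers are assumptions chosen for the example.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed log-probability of a full response under
    either the model being trained (policy) or the frozen reference model.
    """
    # How much more the policy likes each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) = log(1 + exp(-x)), written in a numerically stable form.
    return max(-logits, 0.0) + math.log1p(math.exp(-abs(logits)))

# Policy identical to the reference: no preference learned yet, loss = log(2).
baseline = dpo_loss(-5.0, -7.0, -5.0, -7.0)

# Policy has shifted probability toward the chosen response: loss drops.
improved = dpo_loss(-4.0, -9.0, -5.0, -7.0)

print(baseline, improved)
```

The loss only falls when the policy raises the chosen response relative to the rejected one more than the reference model does, which is why DPO needs no separate reward model.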

Langflow – Drag-and-Drop AI Agent Builder

Langflow offers a clean visual interface for building AI agents and workflows. Inspired by tools like LangChain but far more accessible, it lets users visually define how data flows between components such as vector stores, prompts, APIs, and LLMs.

You can prototype and deploy complex AI pipelines using nothing but your mouse, making it an excellent tool for product teams and technical marketers looking to ship AI features fast.
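Conceptually, a visual flow like this is a directed graph: each box is a component, each wire says whose output feeds whom. The sketch below shows that idea in a few lines of Python using the standard library's `graphlib`. It does not use Langflow's API; the component functions, node names, and the stubbed "LLM" are hypothetical stand-ins.

```python
from graphlib import TopologicalSorter

# Toy knowledge base for the stand-in vector store (hypothetical data).
DOCS = [
    "Langflow is a visual builder for AI workflows.",
    "Kalman filters smooth noisy signals.",
]

def vector_store(up):
    """Return documents sharing at least one word with the question."""
    words = set(up["question"].lower().replace("?", "").split())
    return [d for d in DOCS if words & set(d.lower().rstrip(".").split())]

def prompt_template(up):
    """Combine retrieved context and the question into one prompt string."""
    context = " ".join(up["vector_store"])
    return f"Context: {context}\nQuestion: {up['question']}"

def fake_llm(up):
    """Stub model: echoes the prompt instead of calling a real LLM."""
    return "LLM saw: " + up["prompt"]

NODES = {"vector_store": vector_store, "prompt": prompt_template, "llm": fake_llm}
# Each node maps to the set of nodes whose outputs it consumes (the "wires").
EDGES = {
    "vector_store": {"question"},
    "prompt": {"question", "vector_store"},
    "llm": {"prompt"},
}

def run_flow(nodes, edges, inputs):
    """Run every node after its upstream nodes, passing outputs along the wires."""
    results = dict(inputs)
    for name in TopologicalSorter(edges).static_order():
        if name in results:  # externally supplied input, nothing to compute
            continue
        upstream = {dep: results[dep] for dep in edges.get(name, ())}
        results[name] = nodes[name](upstream)
    return results

results = run_flow(NODES, EDGES, {"question": "What is Langflow?"})
print(results["llm"])
```

Rewiring the flow means editing `EDGES`, which is roughly what dragging a connection on a canvas does for you.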

AutoAgent – Speak to Build: Natural Language for Agent Creation

AutoAgent goes one step further by allowing you to create and deploy AI agents using natural language commands. It supports modern agent interaction patterns like ReAct (which combines reasoning and action) and function calling.

This tool is aimed at non-technical users who want to create tailored virtual assistants or autonomous agents without diving into LLM prompts or Python scripts. Think of it as ChatGPT for building ChatGPT-like agents.
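To make the ReAct pattern concrete, here is a minimal sketch of the loop: the model emits a thought and an action, the runtime executes the tool and appends an observation, and the cycle repeats until a final answer appears. This is not AutoAgent's code. The `Action: tool[arg]` text format, the `add` tool, and the scripted stand-in for the LLM are all assumptions made so the example runs without a model.

```python
import re

# Hypothetical tool registry: tool name -> function taking a string argument.
TOOLS = {
    "add": lambda arg: str(sum(int(x) for x in arg.split(","))),
}

def scripted_model(transcript):
    """Stand-in for an LLM: chooses the next step from the transcript so far."""
    if "Observation:" not in transcript:
        return "Thought: I need to add the numbers.\nAction: add[2,3]"
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {observation}"

def react(question, model, tools, max_steps=5):
    """Alternate model steps and tool calls until a final answer is produced."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += "\n" + step
        final = re.search(r"Final Answer:\s*(.*)", step)
        if final:
            return final.group(1).strip(), transcript
        action = re.search(r"Action:\s*(\w+)\[(.*?)\]", step)
        if action is None:
            break  # the model produced neither an action nor an answer
        name, arg = action.groups()
        observation = tools[name](arg) if name in tools else f"unknown tool {name}"
        transcript += f"\nObservation: {observation}"
    return None, transcript

answer, trace = react("What is 2 + 3?", scripted_model, TOOLS)
print(trace)
```

Function calling follows the same loop, except the model returns the tool name and arguments as structured data instead of text that has to be parsed.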

Rethinking Time Series Forecasting: LLMs Move Beyond Pattern Matching

While LLMs have proven adept at understanding language, their application to time series forecasting (TSF) has remained underexplored. Traditional TSF methods typically rely on “fast-thinking” paradigms—models learn patterns in historical data and directly extrapolate them into the future. But this often results in black-box predictions with little interpretability or reasoning.

A recent paper, “Time Series Forecasting as Reasoning: A Slow-Thinking Approach with Reinforced LLMs” (arXiv), proposes a more deliberate, reasoning-oriented alternative: Time-R1, a two-phase framework for training LLMs to reason their way to a forecast.

Inside Time-R1: A Two-Phase Reinforcement Tuning Framework

The researchers behind Time-R1 use a hybrid tuning strategy called Reinforced Fine-Tuning (RFT). It unfolds in two distinct stages:

Warm-Up with Supervised Fine-Tuning (SFT)

In this phase, the LLM is fine-tuned using synthetic Chain-of-Thought (CoT) samples designed to teach the model how to break down time series patterns and reason toward a forecast. These samples include step-by-step reasoning and structured outputs to condition the model to follow a logical, interpretable path.

The goal: help the LLM internalize not just what to predict, but how to get there.
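As a rough picture of what one such training sample might look like, the sketch below assembles a prompt and a target completion that contains numbered reasoning steps followed by a machine-readable forecast. The `<think>`/`<answer>` tags, the field names, and the toy series are assumptions for illustration; the paper's actual sample format may differ.

```python
import json

def make_cot_sample(history, reasoning_steps, forecast):
    """Build one supervised sample: prompt in, reasoning plus forecast out."""
    prompt = (
        "Here are the latest observations of a series: "
        + ", ".join(str(v) for v in history)
        + f". Forecast the next {len(forecast)} values."
    )
    reasoning = "\n".join(
        f"{i}. {step}" for i, step in enumerate(reasoning_steps, start=1)
    )
    # Reasoning first, then a structured answer that a parser can check.
    completion = (
        f"<think>\n{reasoning}\n</think>\n"
        f"<answer>{json.dumps(forecast)}</answer>"
    )
    return {"prompt": prompt, "completion": completion}

# Toy example with made-up numbers.
sample = make_cot_sample(
    history=[10, 11, 12],
    reasoning_steps=[
        "The series rises by 1 at every step.",
        "No seasonality is visible in such a short window.",
        "Extending the trend gives 13, then 14.",
    ],
    forecast=[13, 14],
)
print(sample["completion"])
```

Keeping the final answer in a fixed, parseable slot is what lets later training stages score the format and the numbers automatically.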

Reinforcement Learning with Custom Rewards

Once the model can simulate reasoning patterns, reinforcement learning kicks in. A sophisticated reward function scores each output across multiple dimensions—format correctness, forecast accuracy, structural similarity to expected reasoning paths, and more.
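A multi-part reward of this kind can be sketched in a few lines. The version below covers only two of the dimensions mentioned, format correctness and forecast accuracy, and combines them with fixed weights. The output tags, the weights, and the `1 / (1 + MSE)` accuracy score are assumptions for illustration, not the reward used in the paper.

```python
import json
import re

# Assumed output format: reasoning in <think>, forecast as a JSON list in <answer>.
ANSWER_RE = re.compile(r"<think>.*?</think>\s*<answer>(.*?)</answer>", re.DOTALL)

def parse_forecast(output, horizon):
    """Return the forecast as floats, or None if the output is malformed."""
    match = ANSWER_RE.search(output)
    if match is None:
        return None
    try:
        values = json.loads(match.group(1))
    except json.JSONDecodeError:
        return None
    if not isinstance(values, list) or len(values) != horizon:
        return None
    if not all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
        return None
    return [float(v) for v in values]

def reward(output, target, w_format=0.2, w_accuracy=0.8):
    """Weighted sum of a format score and an accuracy score, both in [0, 1]."""
    forecast = parse_forecast(output, len(target))
    if forecast is None:
        return 0.0  # unparseable output earns nothing
    mse = sum((f - t) ** 2 for f, t in zip(forecast, target)) / len(target)
    accuracy = 1.0 / (1.0 + mse)  # 1.0 for a perfect forecast, decays with error
    return w_format * 1.0 + w_accuracy * accuracy

target = [13.0, 14.0]
perfect = reward("<think>trend +1</think><answer>[13, 14]</answer>", target)
off_by_one = reward("<think>trend +2</think><answer>[14, 15]</answer>", target)
malformed = reward("The next values are 13 and 14.", target)
print(perfect, off_by_one, malformed)
```

Splitting the reward this way lets a well-formatted but inaccurate forecast still earn partial credit, which gives the policy a gradient to follow early in training.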

Time-R1 also introduces GRIP (Group-based Relative Importance for Policy Optimization), which uses non-uniform sampling to encourage the model to explore, and then reinforce, the reasoning paths that lead to better forecasts.


Results That Speak Volumes

In tests across nine diverse datasets, Time-R1 outperformed both classic statistical models and LLM baselines in forecasting accuracy and interpretability. This shows that giving LLMs a structured way to “think slowly” can unlock their reasoning potential even in numeric, non-linguistic domains.

It’s a meaningful step forward, especially as sectors like finance, climate modeling, and supply chain logistics increasingly look to AI for smarter, more explainable forecasting.

📈 In related news, Microsoft recently introduced a hybrid forecasting approach combining transformers and Kalman filters for energy demand prediction—highlighting growing interest in more robust time series AI models. [1]

AI is Getting Smarter—and More Accessible

The landscape of AI development is changing. With tools like RAGFlow and Langflow, non-coders can now build sophisticated AI systems, while research like Time-R1 shows that LLMs can move beyond static predictions to structured reasoning.

Whether you’re a startup founder, enterprise technologist, or AI enthusiast, these tools and methods open up powerful new possibilities—without requiring a PhD in machine learning or fluency in Python.

As AI continues to evolve, the line between developer and domain expert will only blur further. And that might be exactly what we need to build systems that are not only smart—but also usable, trustworthy, and creative.
