Gemini Robotics: Why Local AI Matters

Eric Walker · July 9, 2025

Google DeepMind’s Gemini Robotics On-Device takes the brains of a multimodal foundation model and stuffs them into the robot itself. That leap could cut network cords, shrink costs, and, most importantly, let robots respond in human-level timeframes. The next step is clear: turn today’s impressive lab demos into tomorrow’s everyday tools.

A quiet but consequential launch

On June 24, 2025, Google DeepMind slipped out a seemingly modest news item: a compact variant of its multimodal Gemini Robotics model that can run entirely on a robot’s own hardware, no data center required. Dubbed Gemini Robotics On-Device, the new vision-language-action (VLA) model is small enough to live on the edge yet, according to Google, maintains performance close to its cloud-backed sibling.

Why does that matter? Because local autonomy tackles two of the biggest pain points in real-world robotics: latency and connectivity. Fast reaction times and on-device data handling become possible even in a factory basement or a disaster zone with no Wi-Fi.

What exactly is Gemini Robotics On-Device?

In DeepMind’s own words, On-Device is “our most powerful VLA model optimized to run locally on robotic devices.” The company claims it preserves the original Gemini Robotics model’s broad dexterity and task generalization while shrinking the compute footprint enough to fit on a single NVIDIA Jetson-class board.

Key design goals include:

- Low-latency inference for real-time control

- Energy efficiency, so mobile robots aren’t forced to hop between power outlets

- Rapid fine-tuning: as few as 50 to 100 demonstrations can adapt the model to a brand-new task or embodiment

Those choices hint at DeepMind’s strategy: rather than ship a one-size-fits-all agent, give developers a flexible foundation model they can mold to their own hardware and workflows.

Almost-flagship performance, offline

Early press coverage suggests that the local model’s hit in accuracy and speed is surprisingly small. Carolina Parada, DeepMind’s head of robotics, told The Verge that offline performance is “almost as good as the flagship,” positioning it as an entry-level option for bandwidth-constrained or security-sensitive deployments.(theverge.com)

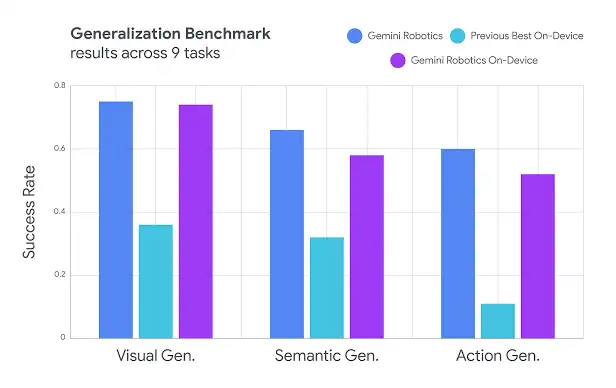

DeepMind’s internal benchmarks support that claim: according to the company, On-Device outperformed previous on-device VLA models on unseen, multi-step tasks while staying within a few percentage points of the cloud-scale Gemini Robotics model.(deepmind.google)

One brain, many bodies

In demos, the team started with its in-house ALOHA research robot but quickly ported the same weights to:

- A bi-arm Franka FR3, a manipulator widely used in industrial R&D

- Apptronik’s Apollo, a 5-foot-8 humanoid designed for warehouse work

The impressive part isn’t just cross-platform deployment; it’s that no retraining from scratch was needed. A few dozen task-specific demonstrations let each robot fold clothes, zip lunchboxes or execute belt-assembly with objects it had never “seen” before.(techcrunch.com, theverge.com)

Fast-track fine-tuning and an SDK for everyone

Gemini Robotics On-Device is also the first DeepMind VLA to ship with an official SDK. The toolkit bundles a MuJoCo simulator, data-collection scripts and recipes for low-shot adaptation—helping teams jump from simulation to hardware in days, not months. Google says the SDK will initially roll out to “trusted testers,” but the company rarely keeps new AI tools private for long.(theverge.com, techcrunch.com)

If Google follows a typical staged rollout, expect a private preview, then an open beta, and possibly an open-source release once the model is hardened and safety-audited.

Why local AI is a very big deal

Running large models on the edge isn’t just a technical flex; it reshapes the entire value proposition of robotics:

- Latency & Jitter

Cloud round-trips of 100 ms sound fine for chatbots, but a robot dodging a forklift needs sub-20 ms reflexes.

- Security, Privacy, Sovereignty

Hospitals, defense contractors and national infrastructure rarely allow continuous outbound streams. Local inference keeps sensitive camera feeds in-house.

- Cost and Scalability

Companies pay twice for cloud robotics: compute rentals and high-bandwidth networking. Local models cut both bills.
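
To make the latency point concrete, here is a back-of-envelope sketch in Python. The 100 ms and 20 ms figures are the ones quoted above; the forklift speed of 2 m/s and the helper name `distance_before_reaction` are assumptions chosen purely for illustration, not numbers from DeepMind.

```python
def distance_before_reaction(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance (in meters) an obstacle travels before the robot can start reacting."""
    return speed_m_per_s * (latency_ms / 1000.0)


FORKLIFT_SPEED = 2.0  # m/s, assumed for illustration only

for label, latency_ms in [("cloud round-trip", 100.0), ("reflex budget", 20.0)]:
    meters = distance_before_reaction(FORKLIFT_SPEED, latency_ms)
    print(f"{label}: {latency_ms:.0f} ms -> forklift moves {meters * 100:.0f} cm")
```

Under these assumptions, the forklift covers about 20 cm during a 100 ms round-trip but only about 4 cm within a 20 ms budget, which is the difference between a near miss and a collision.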

Even fintech-focused outlets like PYMNTS framed Google’s launch as an answer to “latency sensitive applications” where sporadic connectivity is the norm.(pymnts.com)

The broader shift from cloud AI to edge AI

Gemini Robotics On-Device is part of a larger trend: foundation models are migrating from the data center to the device. We’re watching the same pattern play out with smartphones (Gemini Nano on Pixel), cars (Gemini in Android Automotive), and now robots.(cryptopolitan.com)

Why now?

- Hardware progress – NVIDIA’s Jetson Orin modules and Qualcomm’s Oryon-based Snapdragon chips deliver laptop-class compute within power budgets of tens of watts.

- Model compression – Distillation can shrink a 30-billion-parameter network to a single-digit-billion-parameter student, quantization cuts the memory needed per weight, and low-rank adaptation keeps fine-tuning cheap, all with limited loss in accuracy.

- Business pressure – Enterprises want predictable OpEx and regulatory clarity that cloud AI can’t always guarantee.
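
The model-compression point comes down to simple arithmetic: weight memory is roughly parameter count times bits per weight, divided by eight. The sketch below is a rough illustration only. The 30-billion and 3-billion parameter counts echo the orders of magnitude mentioned above and are not the actual size of Gemini Robotics On-Device, and `weight_memory_gb` is a hypothetical helper that ignores activations and runtime overhead.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Illustrative sizes and precisions, not published figures for any Gemini model.
for params, bits in [(30e9, 16), (30e9, 4), (3e9, 16), (3e9, 4)]:
    gb = weight_memory_gb(params, bits)
    print(f"{params / 1e9:.0f}B params @ {bits}-bit: {gb:.1f} GB")
```

A 30-billion-parameter model at 16-bit precision needs about 60 GB for weights alone, while a 3-billion-parameter model quantized to 4 bits needs about 1.5 GB, which is the kind of gap that decides whether a model fits on an embedded board.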

Competitive pressures: no one wants to miss the robotics wave

DeepMind isn’t alone. TechCrunch notes that Nvidia, Hugging Face and a slate of well-funded startups are racing to build their own robotics foundation models.(techcrunch.com)

OpenAI’s “agentic” roadmap and Boston Dynamics’ Spot with code-assist both point to the same destination: robots that reason locally and collaborate globally.

In other words, the platform war that played out on phones (iOS vs. Android) is coming to robotics—only this time, the stakes involve physical safety, not just screen time.

Near-term use cases

- E-commerce fulfillment – Offline dexterity turns dumb picking arms into collaborative coworkers that don’t freeze whenever Wi-Fi hiccups.

- Home assistance – Folding laundry or setting the table demands nuanced manipulation in cluttered, connectivity-spotty homes.

- Field inspection – Wind-turbine or pipeline robots can’t count on 5G at the top of a tower or underground.

- Medical logistics – Hospital privacy rules often restrict cloud streaming; local inference keeps patient data on-site, which eases HIPAA compliance.

Because Gemini Robotics On-Device can adapt to new tasks with only dozens of demos, small organizations can prototype niche workflows without a Ph.D. in reinforcement learning.

Open questions and challenges

- Hardware standardization – Jetson-class SoCs are plentiful, but thermal envelopes vary wildly across robot designs.

- Battery drain – Even efficient inference burns watts; mobile platforms must balance compute cycles against mission duration.

- Safety guardrails – DeepMind touts “holistic safety” and recommends red-teaming every embodiment. Yet external auditors will want more than benchmarks before robots roam public spaces.(deepmind.google)

- Developer ecosystem – Will Google fully open-source weights and training scripts, or keep a proprietary choke point?
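
The battery-drain trade-off can be sketched the same way. Every number below (battery capacity, base load, and the two compute power draws) is a hypothetical value picked for illustration, and `runtime_hours` is an assumed helper that treats all loads as constant.

```python
def runtime_hours(battery_wh: float, base_load_w: float, compute_w: float) -> float:
    """Estimated runtime when motors, sensors, and inference draw constant power."""
    return battery_wh / (base_load_w + compute_w)


BATTERY_WH = 500.0   # assumed battery capacity
BASE_LOAD_W = 200.0  # assumed draw of motors, sensors, and other electronics

for compute_w in (15.0, 60.0):  # assumed low and high inference power draw
    hours = runtime_hours(BATTERY_WH, BASE_LOAD_W, compute_w)
    print(f"compute at {compute_w:.0f} W -> about {hours:.2f} h of runtime")
```

With these made-up figures, raising inference power from 15 W to 60 W trims runtime from roughly 2.3 hours to roughly 1.9 hours, so compute is a real but not dominant share of the mission budget on a platform like this.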

How these issues resolve will shape whether On-Device becomes the Android of robotics—or another closed, single-vendor stack.

What happens next?

If Gemini Robotics On-Device delivers on even half its promises, 2026 could mark the year edge AI finally unlocks mainstream robotics, much as low-cost GPS helped fuel the smartphone explosion nearly two decades ago.

The most interesting metric to watch isn’t benchmark accuracy; it’s time to first useful demo for small teams. If a three-person startup can teach an off-the-shelf arm to assemble IKEA furniture in a weekend, an entirely new class of consumer and industrial products will follow.