Gemini CLI vs Claude Code: Which AI Terminal Wins?


Eric Walker · 17 July 2025

AI coding assistants have been on fire throughout 2025. Following the breakout success of startup darling Cursor, we've witnessed a cascade of releases from tech giants: Anthropic launched Claude Code in February, OpenAI unveiled Codex CLI in April, and in late June, Google officially introduced Gemini CLI to the party.

As an open-source AI agent designed to run directly in your terminal (licensed under Apache 2.0), Google's Gemini CLI allows developers to interact with AI using natural language commands. The reception has been explosive—it racked up 15.1k GitHub stars in just 24 hours.

Four Key Advantages That Set Gemini CLI Apart

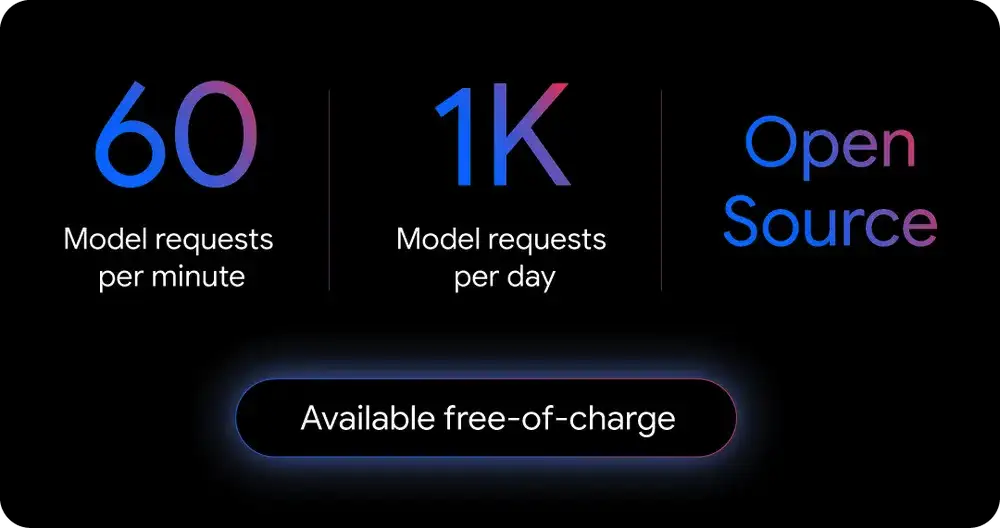

Open source and free: Gemini CLI connects to Google's free, top-tier Gemini 2.5 Pro model. Simply log in with your personal Google account to access Gemini Code Assist. During the preview period, you get up to 60 requests per minute and 1,000 requests daily—completely free.

Multi-task framework: The tool handles everything from programming and file management to content generation, script control, and deep research. While it excels at various tasks, coding remains its strongest suit.

Lightweight design: Developers can embed it directly into terminal scripts or use it as an autonomous agent.

Massive context window: With a context length of up to 1 million tokens, it can process entire codebases for smaller projects in a single pass.

This raises an important question: with a powerful model backing it and a free tier, how does Gemini CLI stack up against the previously popular Claude Code? Let's dive deep into this terminal AI showdown.

Why Terminal AI Tools Are Suddenly Taking Off

From Clicking to Conversing: A Productivity Revolution

Traditional IDEs, while feature-rich, suffer from a fundamental pain point: overly complex workflows. Want to refactor a function? Get ready for multiple steps, menu navigation, and configuration tweaks.

Terminal AI tools completely transform this paradigm. Through a unified conversational interface, they simplify all operations into natural language descriptions. No more memorizing complex command syntax—just type "refactor this function to improve readability" and watch the magic happen.

This shift represents a fundamental abstraction: complex technical operations become natural language interactions, allowing developers to focus on business logic rather than tool mechanics.

Claude Code vs Gemini CLI: A Deep Dive into Core Differences

Context Length: The Memory Game

Context window size represents the most critical difference between these tools:

- Gemini CLI: Supports 1 million tokens (approximately 750,000 words), with potential expansion to 2 million tokens

- Claude Code: Supports 200,000 tokens (approximately 150,000 words)

What does this mean in practice? Longer context enables models to reference more input content when generating responses—crucial for lengthy conversations, complex tasks, or comprehensive document understanding. In multi-turn dialogues, extended context maintains conversational coherence. Put simply: the model has a better memory. With 1 million tokens, Gemini CLI can digest entire codebases for smaller projects.
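To make the two limits concrete, here is a rough sketch of checking whether a project would fit in each window. The 4-characters-per-token ratio is a common rule of thumb and an assumption here, not a figure from either vendor. The function names and the list of file extensions are hypothetical, and a real tokenizer will give different counts.

```python
from pathlib import Path

# Context limits quoted in this article, in tokens.
CONTEXT_LIMITS = {"Gemini CLI": 1_000_000, "Claude Code": 200_000}

# Assumption: roughly 4 characters per token (rule of thumb, varies by tokenizer).
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for a string."""
    return len(text) // CHARS_PER_TOKEN


def estimate_project_tokens(root: str, suffixes=(".py", ".js", ".ts", ".md")) -> int:
    """Sum rough token estimates over matching source files under root."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="ignore")
            total += estimate_tokens(text)
    return total


def fits(tokens: int) -> dict:
    """Map each tool to whether the estimated token count fits its window."""
    return {tool: tokens <= limit for tool, limit in CONTEXT_LIMITS.items()}
```

Calling `fits(estimate_project_tokens("."))` from a project root gives a quick first impression. Treat the result as an estimate only, since prompts, tool output, and conversation history also consume context.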

Cost Structure: Free vs Paid

Gemini CLI's generous free tier:

- 1,000 free requests daily

- Up to 60 requests per minute

- Perfect for individual developers and small teams
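A little arithmetic shows how the two free-tier limits interact. The sketch below uses only the figures quoted above; the function names are hypothetical, and the 8-hour working day is an illustrative assumption.

```python
# Free-tier limits quoted in this article.
REQUESTS_PER_MINUTE = 60
REQUESTS_PER_DAY = 1_000


def minutes_until_daily_cap(rate_per_minute: int = REQUESTS_PER_MINUTE,
                            daily_cap: int = REQUESTS_PER_DAY) -> float:
    """Minutes of sustained full-rate use before the daily cap is reached."""
    return daily_cap / rate_per_minute


def seconds_between_requests(working_hours: float,
                             daily_cap: int = REQUESTS_PER_DAY) -> float:
    """Average spacing that spreads the daily cap evenly over a working day."""
    return working_hours * 3600 / daily_cap


print(round(minutes_until_daily_cap(), 1))     # about 16.7 minutes at full rate
print(round(seconds_between_requests(8), 1))   # about 28.8 seconds apart over 8 hours
```

In other words, the per-minute limit matters for short bursts, while the daily cap is the one that constrains sustained or automated use.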

Claude Code's paid model:

- Subscription plans, or pay-as-you-go API pricing based on usage

- Higher usage means higher costs

- Includes enterprise-grade security and professional support

- Ideal for commercial projects with strict quality requirements

Performance Benchmarks

Based on aggregated independent evaluations, here's how they compare across key metrics:

| Metric | Gemini CLI | Claude Code |
| --- | --- | --- |
| Code Generation Speed | 8.5/10 | 9.0/10 |
| Accuracy | 9.0/10 | 8.8/10 |
| Context Retention | 9.5/10 | 8.0/10 |
| Multi-language Support | 8.8/10 | 9.2/10 |
| Overall Performance | 8.95/10 | 8.75/10 |
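The overall row appears to be the unweighted mean of the four metric rows. That is an inference from the numbers rather than a stated method, and the short check below (with hypothetical names) reproduces it from the table's values.

```python
from statistics import mean

# Per-metric scores copied from the table above (out of 10).
SCORES = {
    "Gemini CLI": {
        "Code Generation Speed": 8.5,
        "Accuracy": 9.0,
        "Context Retention": 9.5,
        "Multi-language Support": 8.8,
    },
    "Claude Code": {
        "Code Generation Speed": 9.0,
        "Accuracy": 8.8,
        "Context Retention": 8.0,
        "Multi-language Support": 9.2,
    },
}


def overall(tool: str) -> float:
    """Unweighted mean of a tool's metric scores, rounded to two decimals."""
    return round(mean(SCORES[tool].values()), 2)


for tool in SCORES:
    print(tool, overall(tool))
```

Because the mean is unweighted, a reader who cares mostly about one metric, such as context retention, may prefer to reweight the scores before comparing.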

Platform Support: Accessibility Matters

Gemini CLI demonstrates excellent cross-platform support from day one, offering full compatibility with Windows, macOS, and Linux. This broad compatibility provides significant advantages in diverse development environments and enterprise deployments.

Claude Code initially focused its optimization efforts on macOS. While it can run on other platforms, its core functionality and user experience remain centered on the macOS ecosystem, which may limit adoption in Windows-dominated enterprise environments.

Authentication and Access Models

Claude Code: Access tied to Anthropic's paid subscriptions (Pro, Max, Team, or Enterprise) or API usage through AWS Bedrock/Vertex AI.

Gemini CLI: Offers an extremely generous free tier for individual users with personal Google accounts, providing 1,000 daily requests to the full-featured Gemini 2.5 Pro model (60 per minute). Professional users requiring higher limits or specific models can upgrade to paid tiers via API keys.


The Verdict: Choose Based on Your Needs

Both tools represent significant advances in AI-assisted development, but they serve different audiences:

Choose Gemini CLI if you:

- Work on personal projects or small team initiatives

- Need to process large codebases or maintain extensive context

- Prefer open-source solutions with community support

- Want immediate access without subscription commitments

- Require cross-platform compatibility

Choose Claude Code if you:

- Work in enterprise environments requiring dedicated support

- Prioritize cutting-edge code generation speed

- Need specialized features for commercial development

- Already use Anthropic's ecosystem

- Primarily develop on macOS

The terminal AI revolution is just beginning. As these tools mature and competition intensifies, developers are the ultimate winners. Whether you choose Google's open approach or Anthropic's premium offering, one thing is clear: natural language interaction with development tools is no longer the future—it's the present.

Recent developments suggest this space will continue evolving rapidly. Microsoft's GitHub Copilot CLI entered public beta in November 2024¹, while Meta announced plans for their own terminal AI assistant in early December². The proliferation of these tools signals a fundamental shift in how we interact with development environments.

Through the rest of 2025, expect to see even more sophisticated features, better integration with existing workflows, and perhaps most importantly, a continued push toward making powerful AI assistance accessible to developers at every level.