FLUX.1 Kontext Review: Open 12B Model for Image Editing


Eric Walker · 26 July 2025

On June 26, 2025, Black Forest Labs released FLUX.1 Kontext [dev], an open-weights, 12-billion-parameter rectified-flow transformer focused on image editing. Until now, top-tier editing has largely lived behind closed APIs; Kontext [dev] pushes many of those capabilities into a downloadable model that runs on consumer hardware and plugs into the tools creative teams already use.

What’s new — and why it matters

Kontext [dev] brings proprietary-level editing into an open-weights package under BFL’s FLUX.1 [dev] Non-Commercial License. Out of the box it supports ComfyUI, Hugging Face Diffusers, and TensorRT, which means you can test it locally, wire it into batch jobs, or stand it up behind your own API without re-plumbing your stack.

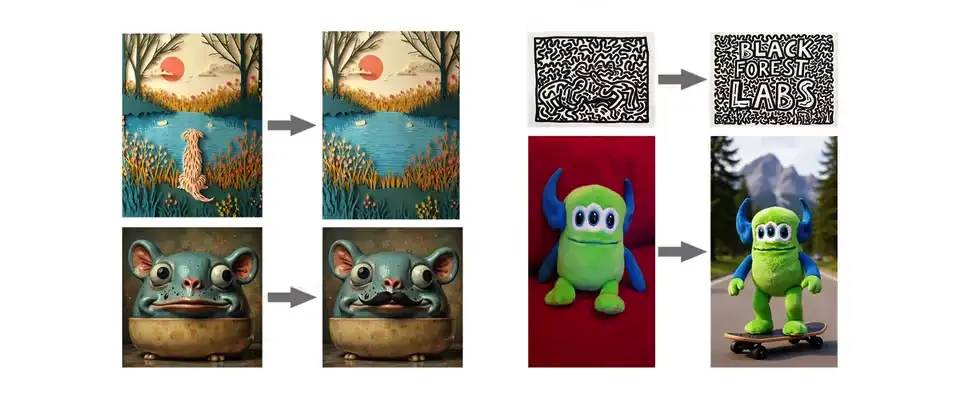

Key capabilities (as described in the tech report and model card)

- Instruction-based editing of existing images (local and global changes).

- Character, style, and object reference without extra fine-tuning, designed to reduce “visual drift” across multi-step edits.

- Guidance-distilled training for efficiency and faster iteration.

These are the headline behaviors you notice immediately in an editing workflow.
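Guidance distillation is easier to picture with forward passes counted. With standard classifier-free guidance (CFG), each sampling step runs the network twice, once with the prompt and once without, and blends the two predictions. A guidance-distilled model is typically trained to take the guidance scale as an input and approximate that blend in a single pass. The toy sketch below assumes a hypothetical `ToyVelocityModel` standing in for the transformer. It is not Kontext's network, and the step count is arbitrary.

```python
import math
from typing import Optional


class ToyVelocityModel:
    """Hypothetical stand-in for a velocity-predicting transformer."""

    def __init__(self) -> None:
        self.forward_passes = 0

    def __call__(self, x: float, prompt: Optional[str],
                 guidance: Optional[float] = None) -> float:
        self.forward_passes += 1
        cond = 1.0 if prompt else 0.0  # toy "conditioning signal"
        if guidance is None:
            # Undistilled: plain conditional or unconditional prediction.
            return -x + cond
        # Distilled: guidance scale is an input; the model reproduces
        # the CFG blend in a single evaluation (toy rule).
        return -x + guidance * cond


def cfg_step(model, x: float, prompt: str, w: float) -> float:
    """Classifier-free guidance: two forward passes per step."""
    v_uncond = model(x, None)
    v_cond = model(x, prompt)
    return v_uncond + w * (v_cond - v_uncond)


def distilled_step(model, x: float, prompt: str, w: float) -> float:
    """Guidance-distilled: one forward pass, guidance passed as input."""
    return model(x, prompt, guidance=w)


steps, w, x = 28, 2.5, 0.3  # arbitrary toy values
cfg_model, distilled_model = ToyVelocityModel(), ToyVelocityModel()
for _ in range(steps):
    v_cfg = cfg_step(cfg_model, x, "add a hat", w)
    v_dist = distilled_step(distilled_model, x, "add a hat", w)

print(cfg_model.forward_passes, distilled_model.forward_passes)  # 56 28
print(math.isclose(v_cfg, v_dist))  # True
```

Both paths return the same guided velocity here by construction. The point is the halved network cost per step, which is where the faster iteration comes from.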

Results I looked for (and where to verify them)

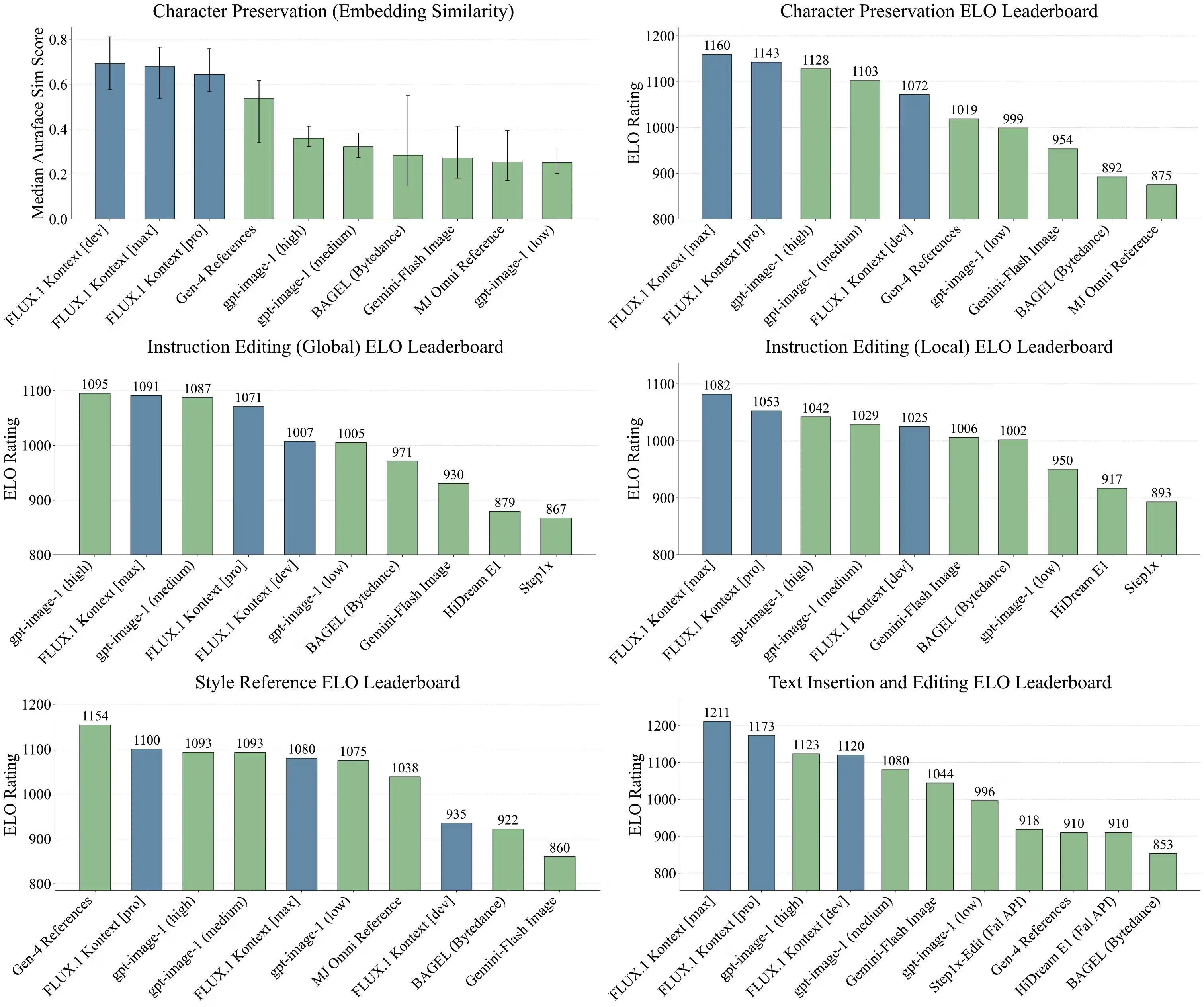

BFL evaluated Kontext on KontextBench, a new, public benchmark of 1,026 image–prompt pairs spanning five categories: local editing, global editing, character reference, style reference, and text editing. In human-preference tests, Kontext [dev] outperformed strong open editors (e.g., ByteDance Bagel, HiDream-E1-Full) and a closed model (Google Gemini-Flash Image) across many categories. BFL says Artificial Analysis's independent runs corroborate this. You can check the benchmark split and categories on the Hugging Face dataset page and the methodology in the tech report.
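Human-preference results are built from pairwise votes, so it helps to see how such votes roll up into per-category win rates. The sketch below uses made-up votes and a hypothetical record layout of `(category, winner)` tuples. It shows the arithmetic only. It does not reproduce BFL's evaluation protocol or any real KontextBench result.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# The five KontextBench categories named above.
CATEGORIES = ("local editing", "global editing", "character reference",
              "style reference", "text editing")


def win_rates(votes: Iterable[Tuple[str, str]], model: str) -> Dict[str, float]:
    """Fraction of pairwise votes won by `model`, per category.

    Every vote counts toward its category total; a vote is a win
    only when its `winner` field equals `model`.
    """
    wins: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for category, winner in votes:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        totals[category] += 1
        if winner == model:
            wins[category] += 1
    return {c: wins[c] / totals[c] for c in totals}


# Hypothetical votes, for illustration only.
toy_votes = [
    ("local editing", "model_a"), ("local editing", "model_b"),
    ("local editing", "model_a"), ("text editing", "model_a"),
]
print(win_rates(toy_votes, "model_a"))
```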

Method, briefly (from the technical report)

Kontext is a flow-matching model that unifies generation and editing with a simple sequence-concatenation scheme for text and image inputs. The design explicitly targets multi-turn consistency (keeping characters and objects stable as you iterate) while cutting generation time to enable interactive use.
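Two ideas carry most of this design. In a rectified-flow setup, a training sample is a straight-line blend between clean latents and noise, and the network learns the constant velocity between them. For editing, the context image's tokens are appended to the noisy target tokens so the transformer attends over both. The NumPy sketch below shows both with toy shapes. The shapes, the time convention (t = 0 clean, t = 1 noise), and the helper names are assumptions for illustration. The real model also carries text tokens and positional encodings that are omitted here.

```python
import numpy as np

rng = np.random.default_rng(0)


def rectified_flow_pair(x0: np.ndarray, noise: np.ndarray, t: float):
    """Straight-line interpolation and its velocity target.

    Convention assumed here: t = 0 is clean data, t = 1 is pure noise.
    """
    x_t = (1.0 - t) * x0 + t * noise
    velocity = noise - x0  # constant along the straight path
    return x_t, velocity


def concat_context(target_tokens: np.ndarray,
                   context_tokens: np.ndarray) -> np.ndarray:
    """Append context-image tokens after target tokens (sequence axis)."""
    return np.concatenate([target_tokens, context_tokens], axis=0)


seq_len, dim = 16, 8                            # toy token count and width
clean_target = rng.standard_normal((seq_len, dim))
context = rng.standard_normal((seq_len, dim))   # tokens of the image being edited
noise = rng.standard_normal((seq_len, dim))

x_t, v = rectified_flow_pair(clean_target, noise, t=0.4)
model_input = concat_context(x_t, context)
print(model_input.shape)  # (32, 8)

# With the true velocity, one Euler step from t = 0.4 to t = 0
# lands exactly on the clean target (the path is a straight line).
recovered = x_t - 0.4 * v
print(np.allclose(recovered, clean_target))  # True
```

The straight path is why few sampling steps can suffice: a model that predicts the velocity well can take large Euler steps without curving off course.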

Performance & hardware notes

If you have access to NVIDIA's Blackwell-generation GPUs, BFL provides TensorRT-optimized weights in BF16/FP8/FP4 that boost throughput and reduce the VRAM footprint while maintaining quality. That optimization shipped on day one alongside the standard weights.
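A back-of-envelope calculation shows why lower-precision weights matter for a 12B model. The sketch below counts only the transformer's parameter storage (bytes per parameter times 12 billion). It ignores the text encoders, VAE, activations, quantization scale factors, and framework overhead, so real VRAM use will be higher.

```python
# Storage cost per parameter for each precision (FP4 packs two values per byte).
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weight_gb(num_params: float, dtype: str) -> float:
    """Decimal gigabytes needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_gb(12e9, dtype):.1f} GB")
# bf16: 24.0 GB
# fp8: 12.0 GB
# fp4: 6.0 GB
```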


Ecosystem, tooling, and how to try it

- Weights & model card: Hugging Face (black-forest-labs/FLUX.1-Kontext-dev).

- Reference inference code: BFL’s official GitHub repo (includes a usage-tracking path if you later buy a self-hosted commercial license).

- APIs/hosted runs: BFL and partners (FAL, Replicate, Runware, DataCrunch, Together AI) expose endpoints for quick experiments.

- Pipelines: Ready in Diffusers and available as a ComfyUI node/graph, so you can compose edits with other operators.
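For a quick local test through Diffusers, a minimal sketch might look like the following. It assumes a recent Diffusers release that includes `FluxKontextPipeline`, a CUDA GPU with enough memory for BF16 weights, and that you have accepted the model's license on Hugging Face. The helper names `load_pipeline` and `edit_chain` are hypothetical, and `guidance_scale=2.5` is an illustrative value, so check the model card for current recommendations. Imports are deferred inside the functions so the file can be imported on a machine without the GPU stack.

```python
MODEL_ID = "black-forest-labs/FLUX.1-Kontext-dev"


def load_pipeline(device: str = "cuda"):
    """Load the Kontext [dev] pipeline once and reuse it across edits."""
    # Deferred imports: only needed when actually running the model.
    import torch
    from diffusers import FluxKontextPipeline

    pipe = FluxKontextPipeline.from_pretrained(
        MODEL_ID, torch_dtype=torch.bfloat16
    )
    return pipe.to(device)


def edit_chain(pipe, input_path: str, prompts, guidance_scale: float = 2.5):
    """Apply instructions in sequence, feeding each output into the next edit."""
    from diffusers.utils import load_image

    image = load_image(input_path)
    for prompt in prompts:
        image = pipe(
            image=image, prompt=prompt, guidance_scale=guidance_scale
        ).images[0]
    return image  # a PIL image
```

Typical use would be `pipe = load_pipeline()` followed by `edit_chain(pipe, "product.png", ["Replace the background with a plain white backdrop", "Add a soft shadow under the product"]).save("edited.png")`, where the file names and prompts are placeholders. Chaining outputs this way is also a cheap way to eyeball multi-turn drift on your own images.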

Licensing you should actually read

- Non-commercial by default: Kontext [dev] is released under the FLUX.1 [dev] Non-Commercial License for research and non-commercial use.

- Outputs: The model card explicitly notes that generated outputs may be used for personal, scientific, and commercial purposes as described in that license (a nuance many teams miss).

- Commercial path: BFL now offers a self-serve licensing portal to convert open-weights usage into a commercial deployment with usage tracking.

- Safety & provenance: The model card and announcement detail required filters/manual review for certain content and note C2PA provenance in the API tier.

Read the terms before shipping.

Where KontextBench helps (and where it doesn’t yet)

Having a public 1,026-pair benchmark is a solid start for apples-to-apples comparisons across edit types (text edits, style transfer, character preservation). It won’t replace your product’s QA matrix, but it gives teams a reproducible way to validate multi-turn stability and semantic fidelity before investing in workflows or guardrails.
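One way to turn "multi-turn stability" into a number for your own QA is to embed a fixed region (for example, a character's face) after every edit turn and track its similarity to the original. The sketch below assumes you already have such embeddings from an image encoder of your choice. The vectors, the 0.9 threshold, and the function names are hypothetical and not part of KontextBench.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def drift_report(turn_embeddings: List[Sequence[float]],
                 threshold: float = 0.9) -> List[int]:
    """Indices of turns whose embedding falls below `threshold`
    cosine similarity to turn 0 (the unedited reference)."""
    reference = turn_embeddings[0]
    return [i for i, emb in enumerate(turn_embeddings[1:], start=1)
            if cosine(reference, emb) < threshold]


# Hypothetical embeddings for turns 0..3.
turns = [[1.0, 0.0, 0.0], [0.99, 0.1, 0.0], [0.95, 0.2, 0.05], [0.6, 0.8, 0.0]]
print(drift_report(turns))  # [3]
```

Comparing every turn against turn 0, rather than against the previous turn, catches slow cumulative drift that small step-to-step changes would hide.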

What to watch

- External head-to-heads: Artificial Analysis and similar trackers are adding Kontext runs to their dashboards; keep an eye on those for neutral latency/quality comparisons as more models update.

- License compliance in the wild: Because outputs can be used commercially (subject to the license), expect increased scrutiny of filtering and provenance requirements in open deployments.

Verdict

If you’ve been waiting for an open-weights editor that holds up under multi-turn, reference-heavy workflows, Kontext [dev] is the first broadly accessible model that feels like the closed systems — with the bonus of local control and a maturing licensing path. For creative teams, product photo pipelines, and anyone who needs repeatable edits with minimal drift, it’s absolutely worth piloting alongside your current stack.
