AI Coding Tools Make Devs 19% Slower Despite Feeling Faster


Eric Walker · July 16, 2025

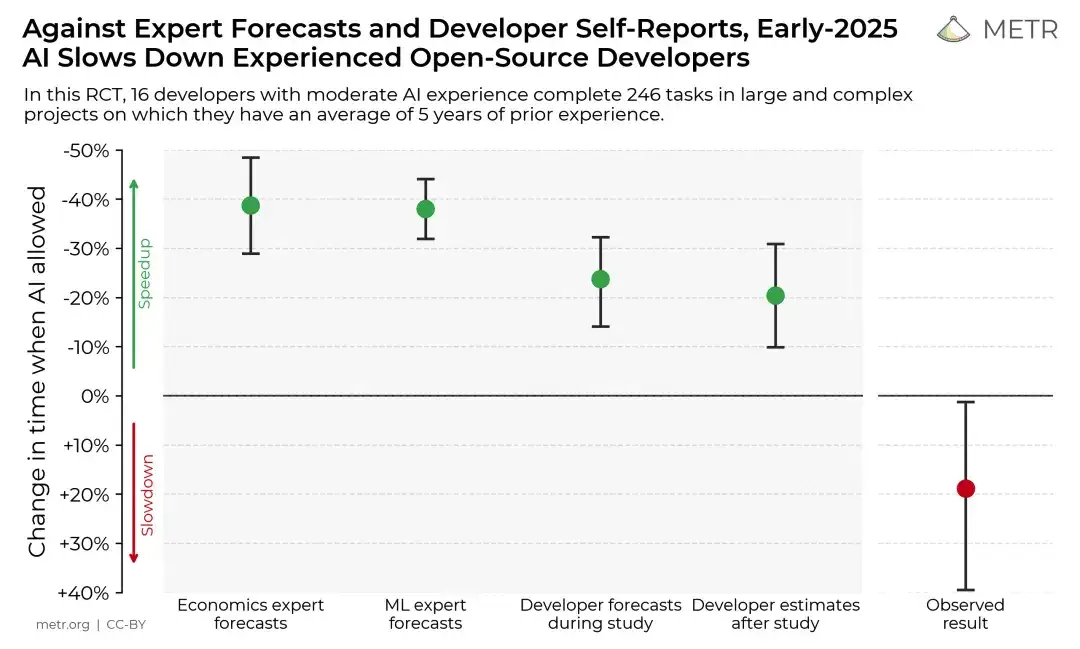

The promise of AI-powered coding assistants has been one of tech's most compelling narratives. From GitHub Copilot to Cursor Pro, these tools have been marketed as productivity game-changers. But a study from METR (Model Evaluation & Threat Research) has revealed a startling result: experienced developers using AI coding tools took 19% longer to complete their tasks, even while believing they were 20% faster.

This isn't just another tech study—it's a reality check for an industry betting billions on AI-augmented development.

The Study That Shocked Silicon Valley

METR's randomized controlled trial, published in July 2025, examined how early-2025 AI tools affect experienced open-source developers working on their own repositories. The methodology was rigorous: 16 seasoned developers from major open-source projects tackled 246 real-world coding tasks, with each task randomly assigned to be completed with or without AI assistance.

The setup was designed to mirror real-world conditions. Developers used popular AI coding assistants like Cursor Pro with Claude 3.5/3.7 Sonnet, working on their actual projects rather than artificial test scenarios. Tasks ranged from bug fixes to feature implementations, each estimated to take 1-2 hours.

The Perception-Reality Gap

Here's where it gets fascinating. Before starting their tasks, developers predicted AI tools would reduce completion time by 24%. After finishing, they estimated AI had saved them 20% of their time. The reality? They were 19% slower when using AI assistance.

This 39-percentage-point gap between perception (a believed 20% speedup) and reality (a measured 19% slowdown) is a striking cognitive disconnect. As one researcher noted, "Developers felt more productive with AI, but they were actually less productive."
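The arithmetic behind that gap can be checked with a minimal sketch. It uses only the three percentages quoted above; the variable and function names are illustrative and do not come from the study. It also shows what a 19% increase in completion time would imply for tasks finished per unit of time, assuming the slowdown applies uniformly.

```python
# Figures quoted in the article, as percent change in task completion time.
# Negative means tasks finish sooner; positive means they take longer.
forecast_change = -24.0   # developers' prediction before starting
perceived_change = -20.0  # developers' estimate after finishing
measured_change = 19.0    # change observed in the study


def perception_gap(perceived: float, measured: float) -> float:
    """Percentage-point distance between the believed and measured change."""
    return measured - perceived


def throughput_change(time_change_pct: float) -> float:
    """Percent change in tasks completed per unit time for a given time change.

    Assumes every task slows down (or speeds up) by the same factor.
    """
    time_ratio = 1.0 + time_change_pct / 100.0
    return (1.0 / time_ratio - 1.0) * 100.0


gap = perception_gap(perceived_change, measured_change)
print(f"Perception gap: {gap:.0f} percentage points")
print(f"Throughput change: {throughput_change(measured_change):.1f}%")
```

Note that "19% slower" describes time per task, so under this uniform-slowdown assumption the drop in tasks completed per hour is somewhat smaller than 19%.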

Breaking Down the Paradox

Why Do Developers Feel Faster?

The psychological factors at play are complex. When AI generates code instantly, it creates a sensation of speed and efficiency. Developers report feeling "in the flow" as they accept, modify, or reject AI suggestions. This constant stream of automated assistance masks the actual time spent on:

- Reviewing and understanding AI-generated code

- Debugging subtle errors introduced by AI

- Refactoring to match project conventions

- Context-switching between writing and reviewing modes

According to Simon Willison's analysis, "The most interesting finding is that developers felt they were more productive with AI, but they were actually less productive."

The Hidden Time Sinks

METR's detailed analysis revealed several factors contributing to the slowdown:

- Quality Control Overhead: AI-generated code often looks correct at first glance but contains subtle bugs or doesn't fully align with project architecture

- Context Loading: Time spent explaining project context to AI tools

- Decision Fatigue: Constantly evaluating AI suggestions disrupts deep focus

- Over-reliance: Developers sometimes accepted suboptimal AI solutions rather than implementing better approaches

Implications for the Industry

Rethinking AI Integration

This study challenges the tech industry's most bullish AI narrative. As reported by TechCrunch, "AI coding tools may not offer productivity gains for experienced developers"—a finding that could reshape how companies approach AI adoption.

The results suggest we need a more nuanced understanding of where AI adds value. Rather than universal productivity boosters, these tools might be better suited for specific scenarios:

- Junior developers learning new frameworks

- Boilerplate code generation

- Documentation and test writing

- Code review assistance

The Learning Curve Factor

Several analysts have pointed out that the study's timeframe might not capture long-term adaptation effects. As developers become more skilled at prompting and integrating AI suggestions, productivity gains might emerge. However, METR's researchers designed the study to minimize this factor by using developers already familiar with AI tools.


What This Means for Developers

Recalibrating Expectations

For individual developers, these findings offer important lessons:

- Trust but Verify: Your perception of productivity might not match reality

- Measure Actual Output: Track completed tasks, not just time spent coding

- Strategic Use: Deploy AI for appropriate tasks rather than universal application

- Maintain Core Skills: Don't let AI assistance atrophy fundamental programming abilities
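One way to act on "Trust but Verify" and "Measure Actual Output" is to keep a small personal task log and compare estimates against measured time. The sketch below is only an illustration: the `TaskRecord` fields, the `overrun_ratio` helper, and the sample entries are all hypothetical, and a personal log like this is not a randomized trial, so it can hint at a perception gap but not prove one.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class TaskRecord:
    name: str
    used_ai: bool
    estimated_minutes: float  # guess written down before starting
    actual_minutes: float     # wall-clock time measured at completion


def overrun_ratio(records: list[TaskRecord], used_ai: bool) -> Optional[float]:
    """Mean of actual/estimated time for tasks done with or without AI.

    A value above 1.0 means tasks took longer than predicted on average.
    Returns None when no matching tasks have been logged.
    """
    ratios = [
        r.actual_minutes / r.estimated_minutes
        for r in records
        if r.used_ai == used_ai
    ]
    return mean(ratios) if ratios else None


# Made-up sample entries, for illustration only.
log = [
    TaskRecord("fix-login-bug", True, 60, 90),
    TaskRecord("add-csv-export", True, 120, 132),
    TaskRecord("refactor-cache", False, 90, 90),
    TaskRecord("update-docs", False, 30, 36),
]

for label, flag in (("with AI", True), ("without AI", False)):
    ratio = overrun_ratio(log, flag)
    print(f"{label}: actual/estimated = {ratio:.2f}")
```

Writing the estimate down before starting matters here: estimates reconstructed afterwards are exactly the kind of subjective impression the study found unreliable.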

The Path Forward

The study doesn't suggest abandoning AI tools—rather, it calls for more thoughtful integration. As the Atlassian 2025 Developer Experience Report notes, "AI adoption is rising, but friction persists."

Future research should explore:

- Which specific tasks benefit most from AI assistance

- How to optimize human-AI collaboration workflows

- Long-term effects of AI tool usage on code quality

- Differences across programming languages and domains

A Reality Check, Not a Rejection

METR's study serves as a crucial reality check for an industry often swept up in AI hype. The 19% increase in task completion time among experienced developers using AI tools, contrasted with their belief in a 20% speedup, highlights the importance of rigorous measurement over subjective impressions.

This doesn't mean AI coding assistants are worthless. Instead, it suggests we're still in the early stages of understanding how to effectively integrate these tools into professional workflows. The future likely holds more sophisticated AI systems and better integration strategies, but for now, experienced developers might want to approach these tools with measured expectations.

As we navigate this AI-augmented future, one thing is clear: feeling productive and being productive are two very different things. The challenge for developers and organizations alike is to bridge that gap with data-driven insights rather than wishful thinking.