CrewAI: 34k-Star Open-Source Framework for Multi-Agent AI


Eric Walker · July 22, 2025

Over the past two years, “agent” has gone from sci-fi trope to software staple. Developers now expect models to reason, delegate and coordinate the way teams do. Among the dozens of frameworks chasing that promise, CrewAI has vaulted to the front: its GitHub repository topped 34,000 stars this summer and briefly led the site’s daily-growth chart. Unlike heavier toolkits bolted onto LangChain, CrewAI was built from scratch in Python, so you can adopt only what you need and still keep latency low.

What Sets CrewAI Apart

Launched publicly in early 2024, CrewAI now averages close to a million monthly downloads, making it one of the fastest-growing AI libraries of 2025. The project pitches itself as the missing “people-ops” layer for LLMs: instead of coding one monolithic chatbot, you compose a crew of specialized agents, give them tools, and let them negotiate who does what.

The Dual-Core Design

| Layer | Purpose in one sentence |
| --- | --- |
| Crews | High-level orchestration: the “brain” that manages roles, goals and collaboration. |
| Flows | Event-driven, step-by-step automation: the “nervous system” that makes sure tasks fire in the right order. |

The split mirrors how real companies balance strategy and operations: leadership sets direction (Crews) while workflows and checklists keep everything on track (Flows).

Crews – Collaborative Intelligence

At the top sits a Crew object that owns the mission, budget and tempo. Inside that container you define individual agents (researchers, writers, testers), each with its own prompt, memory window and tool belt. A lightweight Process manager routes messages and resolves conflicts, while atomic Tasks spell out deliverables and due dates. The payoff is cognitive diversity: instead of one over-prompted LLM, you get multiple viewpoints that can critique and augment one another. Developers on Reddit say this clear separation makes CrewAI projects easier to debug, and models easier to swap, than mega-graphs built in LangChain.
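To make the container-agent-task relationship concrete, here is a minimal plain-Python sketch. It is not CrewAI's API: the `SketchAgent`, `SketchTask` and `SketchCrew` classes are hypothetical stand-ins, and the `act` callables take the place of real LLM calls. It only shows the shape of the idea, in which a crew runs tasks in order and hands each agent the previous agent's output as context.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SketchAgent:
    role: str
    goal: str
    # Stand-in for an LLM call: receives the task text and prior context.
    act: Callable[[str, str], str]


@dataclass
class SketchTask:
    description: str
    agent: SketchAgent


@dataclass
class SketchCrew:
    tasks: list[SketchTask]
    log: list[str] = field(default_factory=list)

    def kickoff(self) -> str:
        """Run tasks in order, passing each output on as context."""
        context = ""
        for task in self.tasks:
            context = task.agent.act(task.description, context)
            self.log.append(f"{task.agent.role}: {context}")
        return context


researcher = SketchAgent(
    "researcher", "collect facts", lambda task, ctx: f"notes on {task}"
)
writer = SketchAgent(
    "writer", "draft copy", lambda task, ctx: f"draft based on [{ctx}]"
)

crew = SketchCrew(
    tasks=[
        SketchTask("agent frameworks", researcher),
        SketchTask("write a summary", writer),
    ]
)
result = crew.kickoff()
print(result)
```

Because each agent is just a role plus a callable, swapping the model behind one role does not touch the others, which is the debugging benefit the paragraph above describes.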

Spotlight: The Task Lifecycle

- Draft – A human or agent proposes a task in plain English.

- Assignment – The Process engine matches it to the agent whose role and tool set best fit.

- Execution – The agent may call external APIs, write code, or spin up a sub-crew.

- Review – Peers or a QA agent critique the output before it rolls up to the Crew summary.

- Closure – Results feed into downstream Flows for storage, reporting or further action.

This lifecycle enforces accountability without over-engineering—you still write tasks as simple dictionaries, but you get hooks for logging and metrics at every hop.
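The five stages above can be modeled as a small state machine with a callback at every transition. The sketch below is an illustration only, assuming a strictly linear lifecycle; `Stage`, `TaskLifecycle` and the `on_transition` hook are hypothetical names, not part of CrewAI.

```python
from enum import Enum
from typing import Callable


class Stage(Enum):
    DRAFT = "draft"
    ASSIGNMENT = "assignment"
    EXECUTION = "execution"
    REVIEW = "review"
    CLOSURE = "closure"


# Stages advance strictly in declaration order.
ORDER = list(Stage)


class TaskLifecycle:
    def __init__(
        self, description: str, on_transition: Callable[[Stage, Stage], None]
    ) -> None:
        self.description = description
        self.stage = Stage.DRAFT
        self._on_transition = on_transition  # hook for logging or metrics

    def advance(self) -> Stage:
        """Move to the next stage and notify the hook."""
        index = ORDER.index(self.stage)
        if index == len(ORDER) - 1:
            raise ValueError("task is already closed")
        previous, self.stage = self.stage, ORDER[index + 1]
        self._on_transition(previous, self.stage)
        return self.stage


history = []
task = TaskLifecycle(
    "summarize Q2 sales",
    lambda old, new: history.append((old.value, new.value)),
)
while task.stage is not Stage.CLOSURE:
    task.advance()
print(history)
```

The hook fires once per hop, so the same place can feed a logger, a metrics counter, or both.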

Flows – Precise, Event-Driven Control

If Crews give you autonomy, Flows give you discipline. A Flow describes states, transitions and triggers in YAML or Python. Need a retry loop only when the market API is down? Add a conditional branch. Want to interleave human approval after budget-sensitive steps? Insert an Event that pauses until Slack says yes. Better yet, you can import an entire Crew as a single Flow node, so strategic reasoning remains reusable.

Core components include:

- Flow – the top-level graph with its own persistence layer;

- Events – time- or data-based triggers (“when stock drops 5%”);

- States – context snapshots that survive restarts;

- CrewSupport – a bridge that lets Flows borrow Crew agents for open-ended reasoning when rules alone fall short.

Together they address the classic agent trade-off: free-form models hallucinate, while rigid pipelines break when inputs drift. Crews + Flows split the difference.
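The retry-on-outage branch and the human approval gate described in this section can be sketched in plain Python. This is not CrewAI's Flow API: `run_flow`, `MarketApiDown` and the `approve` callback are hypothetical names, and a real approval step would wait on an external signal such as a chat message rather than call a function.

```python
from typing import Callable


class MarketApiDown(Exception):
    """Hypothetical error raised when the market API is unavailable."""


def run_flow(
    fetch_price: Callable[[], float],
    approve: Callable[[float], bool],
    max_retries: int = 3,
) -> dict:
    # State that a real flow would persist between steps.
    state = {"attempts": 0, "price": None, "status": "pending"}

    # Conditional retry: loop again only when the API-down error is raised.
    while state["attempts"] < max_retries:
        state["attempts"] += 1
        try:
            state["price"] = fetch_price()
            break
        except MarketApiDown:
            continue

    if state["price"] is None:
        state["status"] = "failed"
        return state

    # Approval gate: the flow proceeds only if the callback says yes.
    state["status"] = "approved" if approve(state["price"]) else "rejected"
    return state


calls = {"n": 0}


def flaky_fetch() -> float:
    """Fails twice, then succeeds, to exercise the retry branch."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise MarketApiDown()
    return 101.5


outcome = run_flow(flaky_fetch, approve=lambda price: price < 150)
print(outcome)
```

Keeping the retry count and the last price in an explicit state dictionary mirrors the idea of States as context snapshots: everything needed to resume lives in one serializable object.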

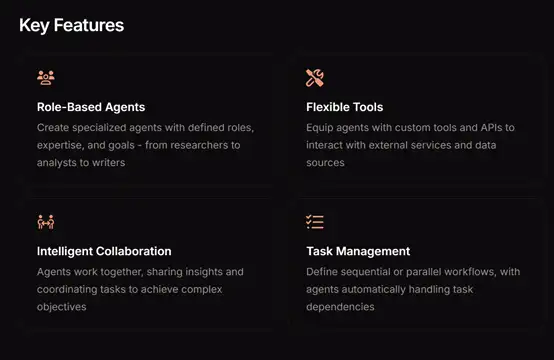

Four Technical Pillars

- Role-Based Agents – You declare expertise and voice for every agent so conversations stay on script.

- Pluggable Tools – Anything that can be wrapped as a Python function—SQL, Zapier, custom REST—is one line away from every agent.

- Shared Memory & Coordination – Agents can see each other’s notes and negotiate task hand-offs instead of working in silos.

- Adaptive Task Graphs – Parallel branches run when dependencies allow; serial branches enforce order when accuracy trumps speed.

These features echo what analysts list as CrewAI’s key differentiators versus AutoGen or Guiding-GPT.
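The "adaptive task graph" idea, where independent branches run in parallel and dependent ones wait, can be illustrated with the standard library alone. The sketch below uses `graphlib.TopologicalSorter` and a thread pool. The task names and `run_task` are made up for the example, and nothing here reflects how CrewAI schedules work internally.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter

# Hypothetical task graph: each key depends on the tasks in its set.
dependencies = {
    "research": set(),
    "pricing": set(),
    "draft": {"research", "pricing"},
    "review": {"draft"},
}


def run_task(name: str) -> str:
    return f"{name} done"  # stand-in for an agent doing real work


def run_graph(graph: dict) -> list:
    """Run tasks batch by batch; tasks within a batch run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    batches = []
    with ThreadPoolExecutor() as pool:
        while sorter.is_active():
            # Tasks whose dependencies have all completed.
            ready = sorted(sorter.get_ready())
            list(pool.map(run_task, ready))  # wait for the whole batch
            sorter.done(*ready)
            batches.append(ready)
    return batches


batches = run_graph(dependencies)
print(batches)
```

Here `research` and `pricing` share a batch because neither depends on the other, while `draft` and `review` run serially behind them.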

Community and Momentum

Adoption goes beyond GitHub vanity metrics. The maintainers run a certification program that has logged over 100,000 developer badges, fueling a Slack community with plugins, design patterns and weekly office hours. Even critics who prefer LangGraph concede CrewAI’s class definitions are “clean and easy to browse,” cutting onboarding time for side projects. KDnuggets now ranks CrewAI among the top three Python frameworks for AI agents in 2025, ahead of several VC-backed rivals.

Where CrewAI Fits in 2025

For solo builders, the framework is “fun for tinkering,” but may be overkill if a single-prompt RAG bot suffices. In enterprise pilots—automated K-1 tax review, e-commerce catalog cleanup—Crews shine by mirroring the org charts teams already understand. If your use case mixes unstructured reasoning with regimented approval policies, CrewAI’s dual-core model is hard to beat.

- Rapid prototyping – Ship a proof-of-concept with two agents and no Flows.

- Gradual hardening – Layer Flows as compliance requirements kick in.

- Scalable deployment – Containerize the same code for on-prem or SaaS; the repo includes Helm charts and Terraform snippets.

The Road Ahead

The maintainers have hinted at a plug-and-play reward-model interface and a visual Crew debugger, both slated for Q4 2025. They’re also working with the Open-LLM-Interop group on a stub that lets agents hot-swap between GPT-4o, Claude 3.5 and local Mixtral models without code changes. If they nail that, CrewAI could become the first agent framework embraced equally by hobbyists and Fortune 500 DevOps.