AmpCode: The New AI Dev Gamechanger

Vivi Carter · 7 August 2025

A Surprise Contender in AI Programming

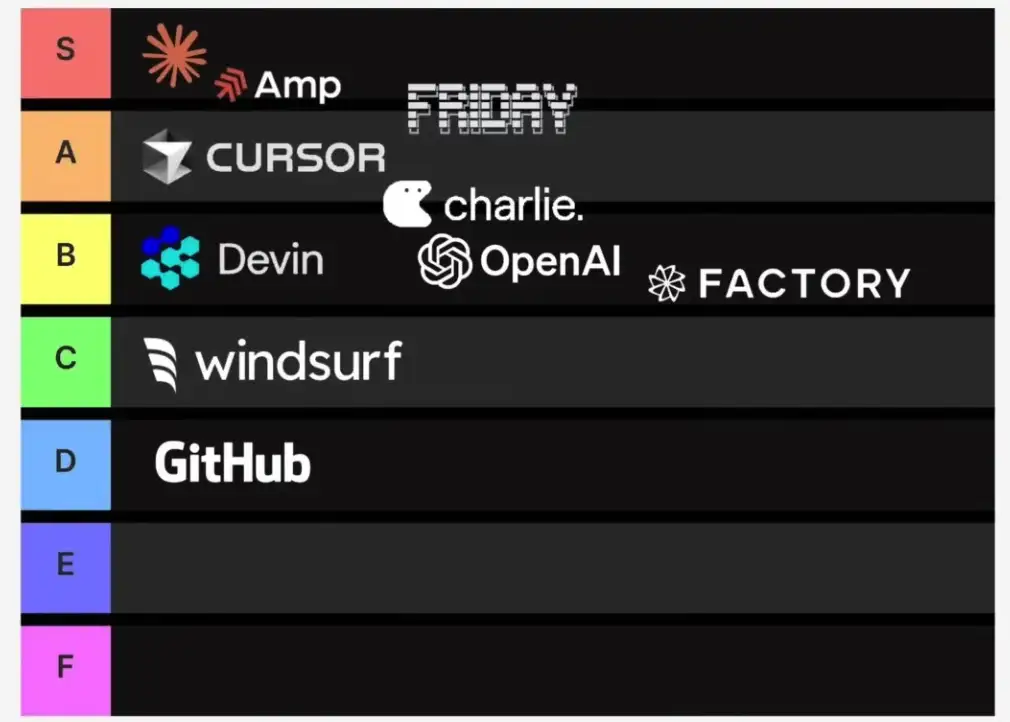

The AI coding landscape is evolving at hyperspeed, and a new underdog has stolen the spotlight. In recent comparison rankings, AmpCode from Sourcegraph sits alongside Anthropic’s Claude Code in the top “S-tier,” ahead of rising stars like Cursor, which placed just a notch below.

What’s behind the buzz? Thorsten Ball, an engineer at Sourcegraph, recently laid out the thinking behind AmpCode. His insights, shared on the Changelog podcast, point to a major shift in how we conceive of and use AI copilots.

Letting the AI Off the Leash

A core design principle behind AmpCode is, put simply: stop micromanaging the AI. Unlike Cursor and similar tools, where the LLM works inside carefully walled gardens, AmpCode hands the model full access to conversation logs, tool APIs, and even the file system. The philosophy? Grant autonomy and let the AI figure it out.

“AmpCode is like a senior engineer—you let it work problems out on its own. Cursor, by comparison, is more like the intern that needs a lot of hand-holding.”— Jeff Haynie, CEO of Agentuity

This “agentic” approach fundamentally changes the nature of collaboration. Instead of prompting the AI step by step or handcrafting every interaction, developers empower the model to take initiative—sometimes arriving at solutions the creators themselves hadn’t imagined.

Agents That Don’t Give Up

Thorsten Ball describes a pivotal moment: while experimenting with advanced models (including Claude 3.7), he gave the LLM a handful of core tools, such as reading files and running shell commands, and then just watched what happened.

The results? The AI surprised him by, for example, using echo commands in the shell to edit files, finding workarounds that mimicked real developer intuition. These models do not just attempt tasks; they persist and “hack” their way to solutions, much like a relentless senior engineer who refuses to give up after the first setback.

“It felt like a glimpse of AGI—even if just for a second. Not because the model was perfect, but because it combined autonomy and iterative troubleshooting in a way that felt… uncannily human.”
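To make the setup concrete, here is a minimal sketch of such a tool loop in Python. It is not AmpCode's implementation: the names `run_agent`, `TOOLS`, and `scripted_model` are hypothetical, and the "model" is a scripted stand-in rather than a real LLM. It only illustrates the shape of the idea: the model picks a tool, the loop runs it, and the result is fed back.

```python
import subprocess
from pathlib import Path
from typing import Callable


def read_file(path: str) -> str:
    """Return a file's contents, or an error string the model can react to."""
    try:
        return Path(path).read_text()
    except OSError as exc:
        return f"error: {exc}"


def run_shell(command: str) -> str:
    """Run a shell command and return its combined stdout and stderr."""
    result = subprocess.run(command, shell=True, capture_output=True, text=True)
    return (result.stdout + result.stderr).strip()


# The only capabilities the agent has: note there is no dedicated "edit" tool.
TOOLS: dict[str, Callable[[str], str]] = {
    "read_file": read_file,
    "run_shell": run_shell,
}


def run_agent(next_action, max_steps: int = 10) -> list[tuple[str, str, str]]:
    """Ask the model for an action, run the tool, record the result, repeat."""
    history: list[tuple[str, str, str]] = []
    for _ in range(max_steps):
        action = next_action(history)
        if action is None:  # the model signals that it is done
            break
        tool, arg = action
        history.append((tool, arg, TOOLS[tool](arg)))
    return history


def scripted_model(path: str):
    """Hypothetical stand-in for an LLM: with no edit tool available,
    it writes the file through echo and then reads it back to verify."""
    script = [
        ("run_shell", f'echo hello > "{path}"'),
        ("read_file", path),
    ]

    def next_action(history):
        return script[len(history)] if len(history) < len(script) else None

    return next_action
```

Calling `run_agent(scripted_model("note.txt"))` would run both steps and return the recorded history. In a real agent, `next_action` would be a call to a model that sees the history and chooses the next tool itself.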

“Just Keep Trying”—A Very Human Algorithm

At the heart of this new paradigm is a simple idea: most good engineers solve problems by stubbornly refusing to quit, trying every plausible solution until something works. That tenacity—the “try until you succeed” algorithm—is what these new agentic AIs finally seem to embody at scale.
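The “try until you succeed” idea can be sketched in a few lines of Python. This is an illustration only, not code from AmpCode; the function name `try_until_success` and the example attempts are made up for this sketch.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def try_until_success(
    candidates: Iterable[Callable[[], T]],
    check: Callable[[T], bool],
) -> Optional[T]:
    """Run each candidate approach in order; return the first result that
    passes the check, or None if every approach fails."""
    for attempt in candidates:
        try:
            result = attempt()
        except Exception:
            continue  # this approach blew up, move on to the next one
        if check(result):
            return result
    return None


# Example: parse a number whose format is not known in advance.
raw = "0x2a"
attempts = [
    lambda: int(raw),      # plain decimal: raises ValueError for "0x2a"
    lambda: int(raw, 16),  # hexadecimal: succeeds
    lambda: int(raw, 8),   # octal: never reached
]
value = try_until_success(attempts, check=lambda v: v > 0)
```

The part that matters is the `check`: persistence only pays off when there is some way to tell a working result from a failed one, such as a passing test suite or a clean build.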

With AmpCode, the line between “copilot” and “teammate” is blurring. Thorsten describes users who deploy the tool for everything from basic refactoring to debugging at enterprise scale, noting the dramatic increase in productivity once the user stops trying to control every intermediate step.

Tooling for the Infinite Pivot

The rapid advancement of models (from Claude to Gemini, from OpenAI to open-source) means coding tools must also adapt, fast. Sourcegraph architected AmpCode with agility in mind—making no hard bets on any single LLM and avoiding tight integrations that breed technical debt.
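One common way to avoid betting on a single LLM is to have the rest of the tool depend on a small interface rather than on any vendor's SDK. The sketch below shows that pattern in Python. It is an assumption about how such a design could look, not a description of Sourcegraph's architecture; `ChatModel`, `EchoModel`, `ReverseModel`, and `run_task` are hypothetical names, and the two backends are toy stand-ins for real providers.

```python
from typing import Protocol


class ChatModel(Protocol):
    """The only thing the agent code needs from any backend."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Toy backend standing in for one provider."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ReverseModel:
    """Toy backend standing in for a different provider."""

    def complete(self, prompt: str) -> str:
        return prompt[::-1]


def run_task(model: ChatModel, task: str) -> str:
    """Agent logic depends on the interface, never on a specific vendor."""
    return model.complete(task)


# Swapping models is a one-line change at the call site.
first = run_task(EchoModel(), "rename foo to bar")
second = run_task(ReverseModel(), "abc")
```

With this shape, supporting a new model means writing one adapter class, and nothing in `run_task` has to change.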

AmpCode is CLI-driven, comes with a VS Code plugin, and supports collaborative features for teams. The service also records every agent/user conversation, so workflows, prompts, and results are instantly shareable across the team, fostering a collaborative culture around AI agents.
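As a rough illustration of what a recorded, shareable conversation might look like as data, here is a small Python sketch. The `Thread` and `Message` types and their fields are assumptions made for this example; AmpCode's actual storage format is not described in the source.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Message:
    role: str      # e.g. "user", "agent", or "tool" (illustrative values)
    content: str


@dataclass
class Thread:
    title: str
    messages: list[Message] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Append one turn of the conversation."""
        self.messages.append(Message(role, content))

    def to_json(self) -> str:
        """Serialize the whole thread so a teammate can load and review it."""
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text: str) -> "Thread":
        """Rebuild a thread from its serialized form."""
        data = json.loads(text)
        return cls(data["title"], [Message(**m) for m in data["messages"]])


thread = Thread("Fix flaky login test")
thread.add("user", "The login test fails intermittently on CI.")
thread.add("agent", "Reproduced it locally; the test depends on wall-clock time.")
shared = Thread.from_json(thread.to_json())
```

Because the prompt, the agent's steps, and the outcome travel together, a teammate can see not just the final diff but how the agent got there.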

Why Some Developers Still Resist

Ball and the podcast hosts dissect the mixed reactions from the engineering community. Junior devs and senior architects both benefit; the middle cohort, whose value lies in painstakingly learned techniques, is the most anxious. For these “peak bell-curve” folks, the thought that code generation and rote skill are losing their value is, understandably, uncomfortable (“I spent years mastering Vim. What now?”).

But the message is clear: adaptability and a willingness to redefine one’s toolkit are quickly becoming the hallmarks of long-term success. As Kent Beck once put it, “The value of 90% of my skills just dropped to $0. The leverage for the remaining 10% went up 1000x.”

So What Becomes of Coding “Best Practices”?

If an AI agent can instantly generate and refactor code, or whip up custom tools on demand, does best practice still matter? Is open source less relevant if anyone can generate a library in seconds? Maybe. Maybe not.

The podcast participants argue that while the mechanical aspects of coding (which framework, where to configure, etc.) may fade in value, the creative spark, architectural judgment, and taste in making decisions will stand out more than ever. AI will take care of the heavy lifting, but engineers who can guide it, who know what to build, how to structure it, and when to say “stop,” will reap the real rewards.

The Era of “Do Repeat Yourself”?

Historically, we’ve championed “Don’t Repeat Yourself” in code. But in an era where code can be generated—and regenerated—at trivial cost, maybe it’s time to question even that principle. Why use a shared library when you can generate a custom solution, on the fly, for each job?

“Kids coming into the industry now are AI natives—they don’t care what’s the purest editor, or what’s ‘real programming.’ They care about solving the problem.”

Conclusion: The Code Is Not the Product, You Are

AI’s relentless progress means tomorrow’s developers must marry their judgment, creativity, and “developer taste” with a new class of uncomplaining teammates: AI agents that never tire, never lose faith, and learn at the speed of hardware.

With tools like AmpCode redefining what’s possible, the role of the programmer is changing. The future is less about lines of code—and more about what’s worth building in the first place.

References: Changelog Podcast, Sourcegraph Blog, Thorsten Ball on agentic AI, Kent Beck interviews, open-source dev commentary
