Comparing GPT-5 and GPT-4o: Shaping the Next Era of Generative AI

In recent years, generative AI models have evolved at a breakneck pace, capturing the imagination of enthusiasts and professionals alike. The launches of OpenAI’s GPT-4o in 2024 and, more recently, GPT-5 marked turning points in what these systems can accomplish. As expectations rise for more productive, creative, and safe AI, understanding how these two milestone models differ can help users and developers navigate a rapidly changing landscape.

Model Architecture and Technical Progress

GPT-4o, unveiled in May 2024, marked a major shift in multimodal AI by integrating text, voice, and vision into a single, unified model. Its ability to respond in near real time with natural voice inflection and to interpret complex images (diagrams, screenshots, or photos) set it apart from previous iterations. The “o” stands for “omni,” a reference to its ability to work across these input modes (OpenAI Official Release).

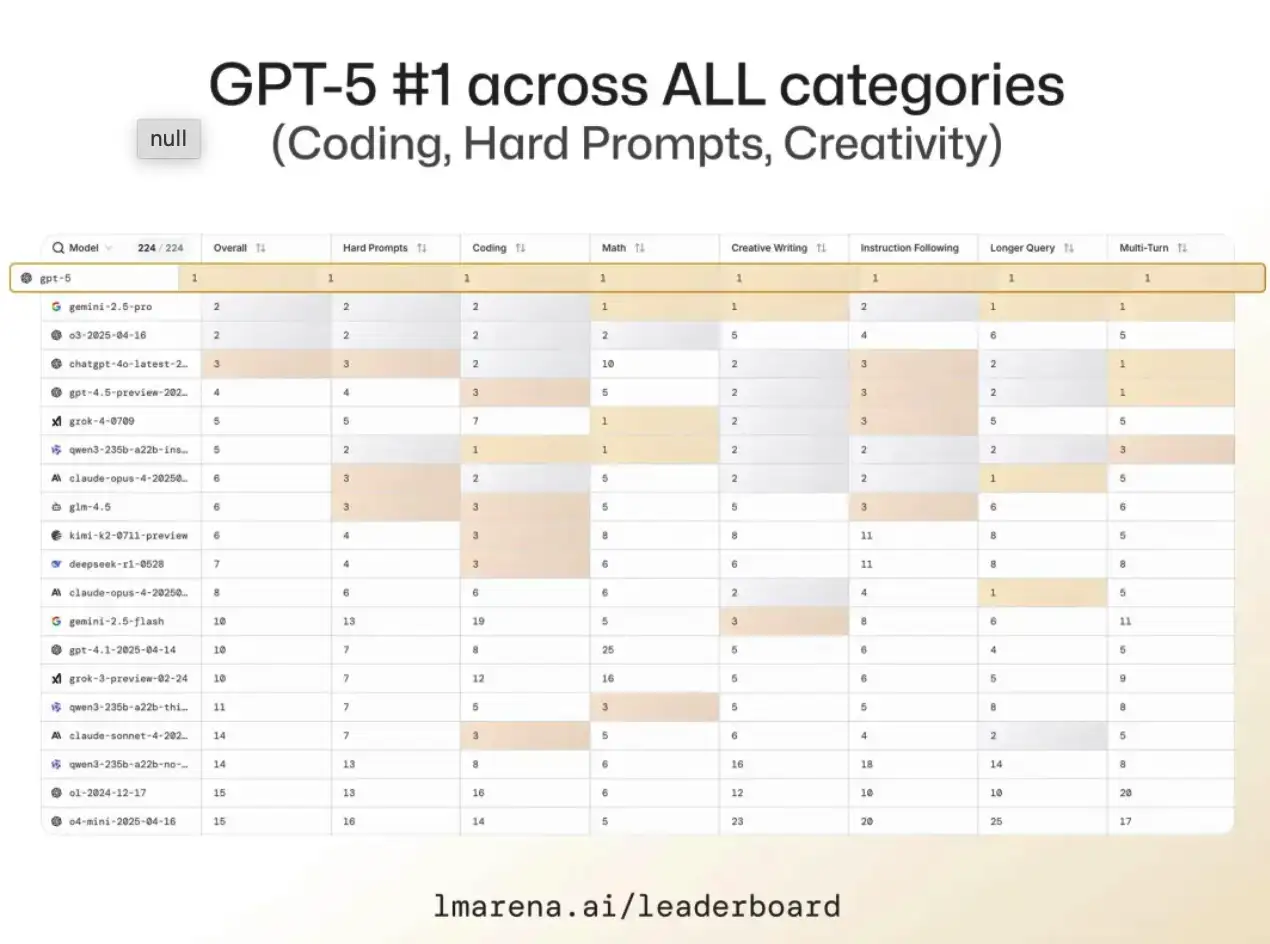

GPT-5 builds on these multimodal foundations and pushes in several new directions. Its architecture incorporates more advanced contextual modeling, which supports longer conversations and richer intent detection. With expanded memory and reasoning skills, GPT-5 can follow multi-step instructions, maintain contextual awareness over extended interactions, and synthesize information from more diverse sources. Initial benchmarks show improved performance on coding, reasoning, and complex synthesis tasks, as well as a finer grasp of abstract and intuitive queries (TechCrunch coverage).

User Experience: Interaction and Personalization

When GPT-4o debuted, its real-time voice mode was considered a leap forward. Conversations felt less robotic, and the ability to “see” through cameras or uploads made interactions more seamless. For many, it represented the closest digital approximation of genuine human dialogue—responding with the right tone, emotional nuance, and contextually relevant information.

GPT-5 extends these capabilities by introducing adaptive persona modeling. The model can dynamically adjust its style, tone, and depth according to user preferences and historical interactions. For users seeking not just information but tailored guidance—whether for learning, creative collaboration, or technical support—GPT-5 offers a noticeably more personalized experience. Furthermore, its native cross-app integration means GPT-5 can support workflows spanning productivity suites, design tools, and web platforms, reducing friction for users who rely on AI to augment daily life.

Performance: Reasoning and Creativity

GPT-4o demonstrated strong reasoning for day-to-day problem solving, code generation, and quick research. Its handling of multimodal queries, such as describing the contents of a photo or walking through the logic in a spreadsheet, gave it broad utility.

GPT-5, however, shifts toward creative and analytical synthesis. Beyond generating text, it can reason through complex challenges, recognize subtleties in user intent, and co-create long-form projects. In creative writing, code, or visual design, GPT-5 sustains narrative or style more effectively by recalling context from previous exchanges. In early beta tests, developers report a fivefold reduction in errors caused by out-of-context replies, and artists note more robust visual interpretation (ZDNet interview with developers).

Ethics, Safety, and Accessibility

Safety and alignment are critical dimensions for generative AI. In response to concerns over hallucinations and inappropriate content, GPT-4o incorporated new guardrails and moderation tools, including real-time feedback for sensitive queries.

GPT-5 goes further, employing reinforcement learning from both official and community feedback to reduce the risk of biased outputs and harmful content. The latest version includes transparent reporting for users and creators, along with more customizable moderation protocols for organizations. Accessibility has also improved: GPT-5 supports more languages and dialects and offers speech synthesis for those with visual impairments.

Use Cases and Impact

GPT-4o made a splash in content creation, customer support, coding help, and creative collaboration. It expanded the possibilities for multimodal learning, interactive gaming, and personal productivity.

GPT-5’s broader and deeper capabilities amplify these use cases and add new ones. It enables advanced research synthesis for academics, consultative help for small businesses, and real-time design for creators. Improved integration with XR (extended reality) and IoT (Internet of Things) devices hints at a future where AI actively shapes and responds to our environments. The impact is already visible: companies are accelerating prototyping and onboarding, and individuals are automating more of their workflows than before.

Looking Forward: Challenges and Opportunities

While the benefits of GPT-5 are clear in terms of intelligence and usability, its release has renewed debates about privacy, job automation, and ethical transparency. As AI systems become increasingly embedded in personal and professional spheres, creators and users alike must remain vigilant about how these tools are trained, deployed, and monitored.

Every leap in ability brings both opportunity and responsibility. For working professionals, creators, and entrepreneurs aged 20 to 50, the challenge is to master the tools rather than be mastered by them. Staying informed and curious will be essential as AI’s next era unfolds.

Conclusion

Both GPT-4o and GPT-5 represent significant steps forward in generative AI, with GPT-5 setting a new standard for contextual intelligence, creative collaboration, and adaptive personalization. As technology continues to blur the boundaries between human and machine, the coming years will demand thoughtful engagement and proactive adaptation from all who use and develop these systems.

References:

OpenAI Blog, TechCrunch, ZDNet, product documentation and developer interviews from 2024-2025.