Claude Code's Rise: Anthropic Re-engineers Path to AGI


Eric Walker · July 13, 2025

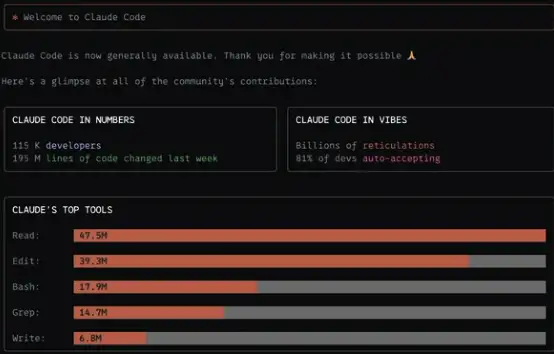

When Anthropic quietly unveiled Claude Code this spring, few expected it to snowball into a phenomenon. Yet in just four months the tool has pulled in 115 000 developers and now touches an eye-popping 195 million lines of code every week. By back-of-the-envelope estimates, that user base implies an annual revenue run-rate of roughly $130 million, or more than $1 000 per developer per year.
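As a quick sanity check, the arithmetic behind those figures can be reproduced in a few lines of Python. The inputs are the reported numbers above; the per-developer results are simple averages, not measured values.

```python
# Back-of-the-envelope check of the figures quoted above.
# Inputs are the article's reported numbers; outputs are simple averages.
ANNUAL_RUN_RATE_USD = 130_000_000   # reported annual revenue run-rate
DEVELOPERS = 115_000                # reported developer count
LINES_PER_WEEK = 195_000_000        # reported lines of code touched per week

revenue_per_dev = ANNUAL_RUN_RATE_USD / DEVELOPERS
lines_per_dev_per_week = LINES_PER_WEEK / DEVELOPERS

print(f"revenue per developer per year: ${revenue_per_dev:,.0f}")
print(f"lines touched per developer per week: {lines_per_dev_per_week:,.0f}")
```

The averages come out to about $1 130 per developer per year and roughly 1 700 lines touched per developer per week.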

Developer-turned-investor Deedy captured the mood on X: “Claude Code Opus is a junior software engineer.” It’s not hyperbole. While most AI coding aids still focus on autocompleting snippets, Claude Code already handles the day-to-day grunt work a human junior engineer would tackle: reading unfamiliar repositories, refactoring stubborn modules, and drafting entire pull requests.

The Double-Edged Sword of Automation

Early adopters love the speed boost but bristle at the occasional overconfidence. One engineer noted that last week’s 195-million-line figure needs “deduplication” because Claude sometimes fixes its own earlier fixes, burning extra context tokens and time along the way. With the Opus tier capped at a flat fee of about $200 per month, the financial pain stays tolerable, but the wasted review cycles still sting.
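The deduplication complaint is easiest to see with a toy count. If a “lines touched” metric records every edit event, a line that Claude fixes and then fixes again is counted twice. The sketch below uses a hypothetical edit log of (file, line) pairs to contrast the raw count with the number of distinct lines; the source does not say how Anthropic actually computes its weekly figure.

```python
from collections import Counter

# Hypothetical edit log: each tuple is (file path, line number) touched by one edit.
# The third and fourth entries revisit lines that were already changed,
# as when the agent "fixes its own earlier fixes".
edits = [
    ("api/handlers.py", 10), ("api/handlers.py", 11),
    ("api/handlers.py", 10), ("api/handlers.py", 11),
    ("api/models.py", 42),
]

raw_count = len(edits)              # every edit event is counted
distinct_count = len(set(edits))    # each (file, line) pair is counted once
repeats = {loc: n for loc, n in Counter(edits).items() if n > 1}

print(f"raw lines touched: {raw_count}")
print(f"distinct lines touched: {distinct_count}")
print(f"lines edited more than once: {repeats}")
```

Here five recorded edits touch only three distinct lines, so the raw count overstates the work by two thirds.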

That ambivalence shows up in countless online anecdotes:

- “I wish my team budgeted only $1 000 a year for this—turns out I’m spending way more,” joked one team lead.

- Another user described the model as “an engineer who never sleeps, works in five shells at once, and already knows your whole codebase.”

Claude isn’t just accelerating work; it’s changing it. Teams now debate whether to pair-program with an AI they don’t fully trust or to let it loose and manually audit the flood of proposed changes afterward.

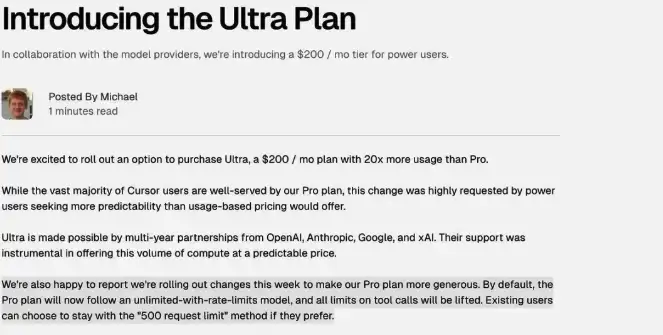

Cursor’s Pricing Stumble Opens the Door Wider

On the opposite end of the hype curve sits Cursor, the venture-backed editor once favored for its slick developer experience and generous API allowances. Cursor advertised that its $20 subscription effectively unlocked $100 worth of Anthropic API credits, until the economics snapped.

Three weeks of unexpected overages forced the company to issue a mea culpa, refund surprise charges, and throttle usage. Developers labeled the move a betrayal of trust. Screenshots of Cursor’s hastily edited blog post—original version promising “unlimited” access, updated version dotted with caveats—made the rounds on social feeds, fanning the outrage.

The API-Reseller Squeeze

Cursor’s plight exposes a structural problem: middlemen who pay list price for model tokens can’t undercut the companies that own the models. Anthropic can afford to subsidize heavy users on its Max plan because its marginal cost is its own electricity and silicon. Cursor, by contrast, pays real dollars at list price for every prompt. As one commentator quipped, “You can’t beat Claude by selling Claude.”
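The squeeze reduces to simple unit economics. The sketch below plugs in the $20 subscription and $100 of API usage cited for Cursor; treating the API bill as the reseller’s only variable cost is a simplifying assumption, and the $5 light user is hypothetical.

```python
import math


def reseller_margin(subscription_usd: float, api_cost_usd: float) -> float:
    """Monthly margin per user for a reseller that pays list price for every token.

    Simplifying assumption: the API bill is the reseller's only variable cost.
    """
    return subscription_usd - api_cost_usd


heavy = reseller_margin(20, 100)  # $20 plan, $100 of API usage (figures from the article)
light = reseller_margin(20, 5)    # hypothetical light user with $5 of API usage

# How many light users it takes to offset one heavy user's loss.
light_users_per_heavy = math.ceil(-heavy / light)

print(f"heavy user margin: {heavy:+.0f} USD/month")
print(f"light user margin: {light:+.0f} USD/month")
print(f"light users needed to offset one heavy user: {light_users_per_heavy}")
```

Each heavy user costs the reseller $80 a month, and under these assumptions it takes six light users to cover that loss. The model owner pays no list-price bill at all, which is the asymmetry the paragraph describes.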

The episode is a cautionary tale for any startup whose value proposition is little more than a UX wrapper around someone else’s AI.

Losing Ground: Developers Stampede Toward Claude

With Cursor wobbling, switching costs have all but evaporated. Teams report migrating entire workflows to Claude Code with Opus 4 in less than a week. The refrain is simple: better context handling, faster token throughput, and, critically, pricing straight from the source.

Even Cursor’s talent raids have not closed the gap. Rumor has it the company lured away the very engineer who helped build Claude Code, assuming insider knowledge would guarantee an edge. But deep pockets and proprietary models trump secondhand insight.

Parallel Power: Eight Tasks at Once

Under the hood, Claude Code’s secret weapon is parallelism. Developers have posted screenshots of eight shells running simultaneously, each churning through an independent task: editing files, running unit tests, or scanning documentation.

Think of it as hiring not one junior but a whole team of tireless juniors who share a hive mind. In practical terms, that means:

- Bulk refactors across dozens of microservices without pausing for coffee.

- Context-aware jumps between entirely different codebases.

- Test-generation and debugging that runs concurrently with feature work.

For startups, spinning up a few Max accounts can now beat scaling a conventional engineering org, at least for well-defined maintenance and integration work.
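Claude Code’s internals are not described here, but the general fan-out pattern behind “eight tasks at once” is easy to sketch. The Python below uses `asyncio` to run a hypothetical list of independent tasks concurrently, capped at eight active sessions by a semaphore; the task names and the sleep standing in for real work are placeholders.

```python
import asyncio

# Hypothetical independent tasks, standing in for the kinds of work listed above.
TASKS = [
    "refactor billing service", "refactor auth service",
    "generate tests for parser", "debug flaky CI job",
    "update API docs", "bump dependencies",
    "scan docs for deprecated calls", "implement feature flag",
]


async def run_task(name: str, semaphore: asyncio.Semaphore) -> str:
    """Simulate one agent session; the sleep is a placeholder for real work."""
    async with semaphore:          # wait for a free slot before starting
        await asyncio.sleep(0.1)   # stands in for editing, testing, or reading docs
        return f"done: {name}"


async def main(max_parallel: int = 8) -> list[str]:
    semaphore = asyncio.Semaphore(max_parallel)  # at most max_parallel tasks run at once
    # gather() runs the coroutines concurrently and returns results in input order.
    return await asyncio.gather(*(run_task(t, semaphore) for t in TASKS))


if __name__ == "__main__":
    for line in asyncio.run(main()):
        print(line)
```

Because the simulated work is just a sleep, lowering `max_parallel` to 1 serializes the same eight tasks and the run takes roughly eight times as long. The pattern only pays off when tasks are truly independent; tasks that edit the same files would need coordination.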

The Road From Junior to Senior Agent

Where does the curve point next? Observers forecast a six-to-eight-month glide path from “junior” to “senior” agent status. The proposed mechanism is a virtuous feedback loop: millions of live coding sessions feed reinforcement-learning pipelines that fine-tune the model on real pull-request outcomes. Each accepted diff teaches the model implicit style guides, architectural conventions, and unspoken business rules.
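The source does not describe Anthropic’s training pipeline. Purely as an illustration of how pull-request outcomes could become a learning signal, the sketch below turns a hypothetical outcome record into a scalar reward. The fields, weights, and names (`PullRequestOutcome`, `reward`) are invented for this example, not taken from any real system.

```python
from dataclasses import dataclass


@dataclass
class PullRequestOutcome:
    """Hypothetical record of what happened to one AI-drafted pull request."""
    merged: bool
    review_comments: int
    reverted_within_week: bool


def reward(outcome: PullRequestOutcome) -> float:
    """Toy scalar reward: merged diffs score well; review churn and reverts cost points.

    The weights are arbitrary illustrations, not values from any real pipeline.
    """
    if not outcome.merged:
        return -1.0
    score = 1.0 - 0.1 * outcome.review_comments  # each review comment suggests rework
    if outcome.reverted_within_week:
        score -= 1.5                             # a revert signals a bad change
    return max(score, -1.0)                      # clamp to the same floor as rejection


samples = [
    PullRequestOutcome(merged=True, review_comments=0, reverted_within_week=False),
    PullRequestOutcome(merged=True, review_comments=4, reverted_within_week=False),
    PullRequestOutcome(merged=True, review_comments=1, reverted_within_week=True),
    PullRequestOutcome(merged=False, review_comments=7, reverted_within_week=False),
]
print([round(reward(s), 2) for s in samples])
```

A clean merge scores highest, a merge that needed heavy review scores less, and a rejected or reverted change is penalized, which is the intuition behind “each accepted diff teaches the model.”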

From a CFO’s vantage point, the ROI math is stunning. If Claude saves even five developer-hours per month, the $1 000 annual spend pays for itself: 60 saved hours a year cover the cost at any loaded rate above about $17 per developer-hour. Multiply that across more than a hundred thousand seats and you arrive at SaaS-giant territory, before factoring in organizational integrations or memory features Anthropic hasn’t even monetized yet.
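The break-even arithmetic can be extended to a net-benefit estimate. The sketch below uses the article’s $1 000 annual spend and five saved hours per month; the $50 and $100 loaded hourly rates are hypothetical values chosen only for illustration.

```python
# Break-even and net-benefit arithmetic for the ROI claim above.
ANNUAL_SPEND_USD = 1_000      # approximate spend per developer per year (from the article)
HOURS_SAVED_PER_MONTH = 5     # the article's "even five developer-hours"

hours_saved_per_year = HOURS_SAVED_PER_MONTH * 12
break_even_rate = ANNUAL_SPEND_USD / hours_saved_per_year


def net_annual_benefit(loaded_hourly_rate: float) -> float:
    """Value of the saved hours minus the subscription spend, per developer per year."""
    return hours_saved_per_year * loaded_hourly_rate - ANNUAL_SPEND_USD


print(f"break-even loaded rate: ${break_even_rate:.2f}/hour")
# Hypothetical loaded rates, for illustration only.
for rate in (50, 100):
    print(f"at ${rate}/hour: net benefit ${net_annual_benefit(rate):,.0f}/year")
```

At a hypothetical $50 per hour the net benefit is $2 000 per developer per year; at $100 per hour it rises to $5 000.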

Spotting the Storm Clouds

Not everyone is cheering. Security-minded engineers warn of a single point of injection: compromise one centralized AI and you could slip backdoors into thousands of private repos at once. The more developers delegate code writing, the bigger the blast radius if something goes wrong.

That specter won’t halt adoption, but it will force new layers of verification—AI reviewing AI, humans spot-checking both, and continuous monitoring for anomalous diffs. Ironically, the very tools that create the risk may also supply the remedy.
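The source does not name any specific tooling, but one hypothetical layer of “continuous monitoring for anomalous diffs” is a rule-based pre-review filter that flags changes touching sensitive paths or adding risky calls. The patterns, the 500-line threshold, and the names `FileDiff` and `flag_diff` below are illustrative assumptions; a real setup would pair such rules with AI and human review rather than rely on them alone.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns a team might treat as high-risk in an AI-generated diff.
SENSITIVE_PATHS = re.compile(r"(^|/)(\.github/workflows|auth|secrets?)(/|$)|\.pem$")
SUSPICIOUS_CODE = re.compile(r"\b(eval|exec)\(|base64\.b64decode|curl\s+[^|]*\|\s*(ba)?sh")


@dataclass
class FileDiff:
    path: str
    added_lines: list[str]


def flag_diff(diffs: list[FileDiff], max_added: int = 500) -> list[str]:
    """Return reasons why this change set deserves closer human review."""
    reasons = []
    total_added = sum(len(d.added_lines) for d in diffs)
    if total_added > max_added:
        reasons.append(f"large change: {total_added} added lines")
    for d in diffs:
        if SENSITIVE_PATHS.search(d.path):
            reasons.append(f"touches sensitive path: {d.path}")
        for line in d.added_lines:
            if SUSPICIOUS_CODE.search(line):
                reasons.append(f"suspicious call in {d.path}: {line.strip()}")
    return reasons


change = [
    FileDiff("src/auth/session.py", ["token = eval(raw)"]),
    FileDiff("README.md", ["Fix typo"]),
]
for reason in flag_diff(change):
    print(reason)
```

The README edit passes untouched, while the change to the auth module is flagged twice: once for its location and once for the `eval` call.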

Why Coding Is the Fast Lane to AGI

Anthropic’s bet is bold: master code and you master creation in the digital realm. Programming isn’t merely another vertical like copywriting or image generation; it’s the meta-tool that builds all other tools. If an AI agent can reason over sprawling codebases, track dependencies, design architectures, and deploy changes safely, it inches closer to the adaptability we associate with general intelligence.

By securing the loyalty of 115 000 engineers and touching nearly 200 million lines of code every week, Claude Code has carved out a commanding beachhead on that path. Each merged pull request is both a product improvement for the customer and a training datapoint for Anthropic’s next-gen models.

In the race toward artificial general intelligence, there are many possible roads. Programming looks increasingly like the express lane—and Claude Code is already barreling down it.